You Can Fine-Tune Too! with PEFT 🤗

The Current Trend of LMs 📈

In 2017, Vaswani et al. introduced the Transformer in the paper 'Attention Is All You Need', and with the arrival of BERT and GPT in 2018, the current wave of research on LMs (Language Models) took off. The pre-training & fine-tuning paradigm introduced at that time became such a central framework for LMs that it is still widely used today. PEFT, the topic of this post (I'll explain exactly what it stands for shortly! 😄), is also a fine-tuning method. Before diving into PEFT, let's first look at what pre-training and fine-tuning actually are!

Pre-training & Fine-tuning

As the name suggests, pre-training literally means training in advance. But what is being trained in advance? The LM itself! 😉 What does that mean? Training an LM in advance? Is an LM built not in one step but in several? Exactly! Today's LMs are not built in a single step: they are usually trained in two stages, pre-training and fine-tuning.

- Pre-training: the stage in which the LM is trained in advance on a huge amount of data so that it develops a general understanding of language → ↑ data & ↑ training time 😅

- Fine-tuning: the stage in which the pre-trained model is trained again on data from a specific domain, adjusting its parameters once more so that it adapts better to that domain → ↓ data & ↓ training time 💪

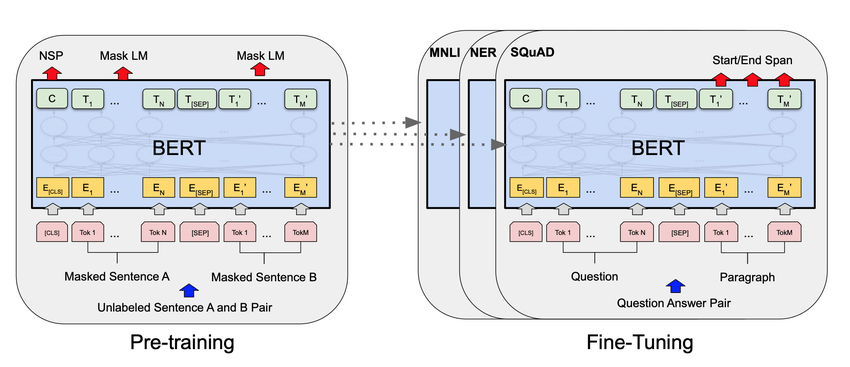

The figure above is a very well-known illustration of pre-training and fine-tuning, taken from the BERT paper. The model on the left is a general-purpose pre-trained model, and the models on the right are versions of that pre-trained model fine-tuned for each task. By building general language ability through pre-training and then specializing the model for concrete tasks through fine-tuning, we can obtain models for many tasks at a comparatively low cost! 😆 Recognized for both its effectiveness and its efficiency, pre-training & fine-tuning still forms the backbone of LMs today.

What's the Problem with Fine-tuning? 🤔

As explained above, the advantage of fine-tuning is that it tunes the model with far less data than pre-training and therefore uses far less computation. In practice, fine-tuning really does use much less data than pre-training, which can reduce its cost considerably. However, a shadow eventually began to fall over fine-tuning, which had seemed perfect... 😥

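The problem is that as models and datasets have grown, fine-tuning has hit computing-resource limits that make it hard for individuals to run, even though it uses far less data than pre-training. For example, fine-tuning a 13B model on a few million examples (come to think of it, that's not a small number 🤣) can still require several GPUs running for several days. 😰 This is not a big issue where compute is plentiful, but for individual learners or researchers like me, such computing resources are a heavy burden, so this is a problem that needs to be solved soon.. 😭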

If fine-tuning, a method designed for efficiency, is no longer efficient, how can we solve this problem? The method created to solve it is PEFT (Parameter-Efficient Fine-Tuning)!! 🤗 As the name suggests, PEFT makes fine-tuning more efficient by making it parameter-efficient. In this post, we will look at which PEFT methods exist and why they work. Now, let's get started!! 🛫

Parameter Efficient Fine-Tuning 🤗

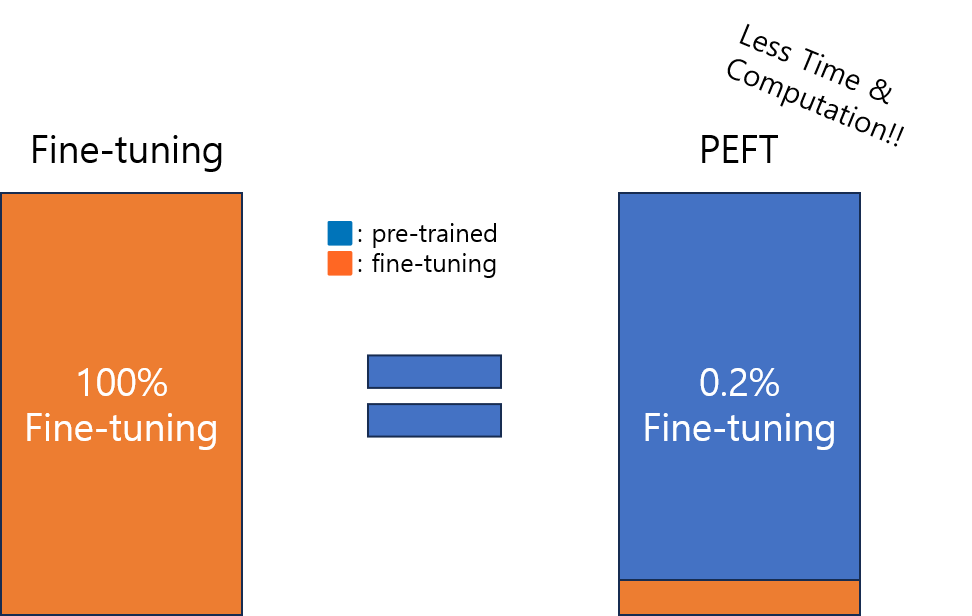

At last, it's time to look at PEFT itself. What exactly is it? PEFT stands for parameter-efficient fine-tuning, but what does 'parameter-efficient' mean here? Let's revisit the concept of fine-tuning. Fine-tuning retrains a pre-trained base model on domain-specific data by 'readjusting its parameters'. The key phrase here is 'readjusting its parameters': standard fine-tuning readjusts 'all' of the model's parameters to obtain a model better suited to the domain. PEFT targets exactly this step, aiming for a similar effect without updating every parameter. By updating only a very small fraction of the parameters, it makes fine-tuning far more efficient! 😆
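To put rough numbers on the 13B example above and on why updating fewer parameters helps, here is a back-of-the-envelope sketch. It assumes a common rule of thumb for mixed-precision training with Adam (about 16 bytes per trained parameter: fp16 weights and gradients plus fp32 master weights and two Adam moments), assumes frozen weights are kept in fp16 (2 bytes each), ignores activations entirely, and uses a hypothetical 0.1% trainable fraction for the PEFT case.

```python
# Back-of-the-envelope memory for model states only (activations and overhead ignored).
# Assumed rule of thumb for mixed-precision Adam, per *trained* parameter:
#   fp16 weight (2) + fp16 gradient (2) + fp32 master weight (4)
#   + fp32 Adam first moment (4) + fp32 Adam second moment (4) = 16 bytes
BYTES_PER_TRAINED_PARAM = 2 + 2 + 4 + 4 + 4
# Assumed: a frozen parameter only needs its fp16 weight (no gradient, no optimizer state).
BYTES_PER_FROZEN_PARAM = 2


def model_state_memory_gb(total_params: int, trainable_params: int) -> float:
    """Approximate memory in GB (10**9 bytes) for weights, gradients, and optimizer states."""
    frozen_params = total_params - trainable_params
    total_bytes = (frozen_params * BYTES_PER_FROZEN_PARAM
                   + trainable_params * BYTES_PER_TRAINED_PARAM)
    return total_bytes / 10**9


if __name__ == "__main__":
    n = 13_000_000_000
    full_ft = model_state_memory_gb(n, trainable_params=n)       # every parameter trained
    peft = model_state_memory_gb(n, trainable_params=n // 1000)  # hypothetical 0.1% trained
    print(f"full fine-tuning: ~{full_ft:.1f} GB, PEFT (0.1% trainable): ~{peft:.1f} GB")
```

Under these assumptions, full fine-tuning of a 13B model needs about 208 GB just for model states, while the PEFT case needs about 26 GB, most of which is the frozen fp16 weights. Real numbers also depend on activations, batch size, and sequence length, which this sketch leaves out.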

A natural question follows: 'Can updating so few parameters really match the performance of fine-tuning with full parameter updates? 🤔' It's an entirely reasonable doubt; when I first saw it, I also thought it made no sense at all. 😁 Let's discuss how this is possible as we go through several PEFT methods! 😙

Adapter: Parameter-Efficient Transfer Learning for NLP (Houlsby et al., 2019)

Adapter, one of the very first parameter-efficient training approaches, was introduced in the 2019 paper 'Parameter-Efficient Transfer Learning for NLP'. Its goals were as follows, and you can think of them as the founding principles of PEFT. 😊

- Build a model that can easily switch between many tasks by using small Adapter modules. 🤖

- Achieve performance similar to fine-tuning while updating far fewer parameters. 🔻🆙

To achieve these goals, the Adapter paper keeps the pre-trained weights frozen (fixed and never updated) and updates only the Adapter layers. This achieves both goals at once: because the pre-trained weights are never updated, the model can be used for any task simply by swapping in the corresponding Adapter, and because only the Adapters are trained, far fewer parameters are updated! 🙂 Now let's look at exactly what an Adapter is!

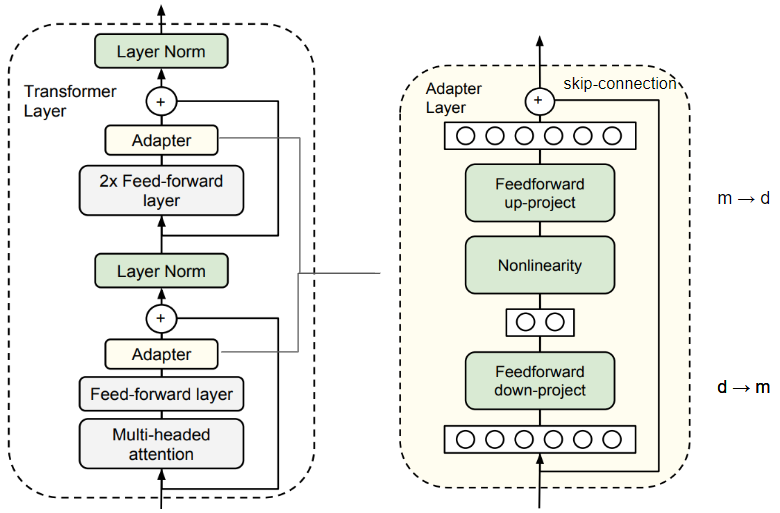

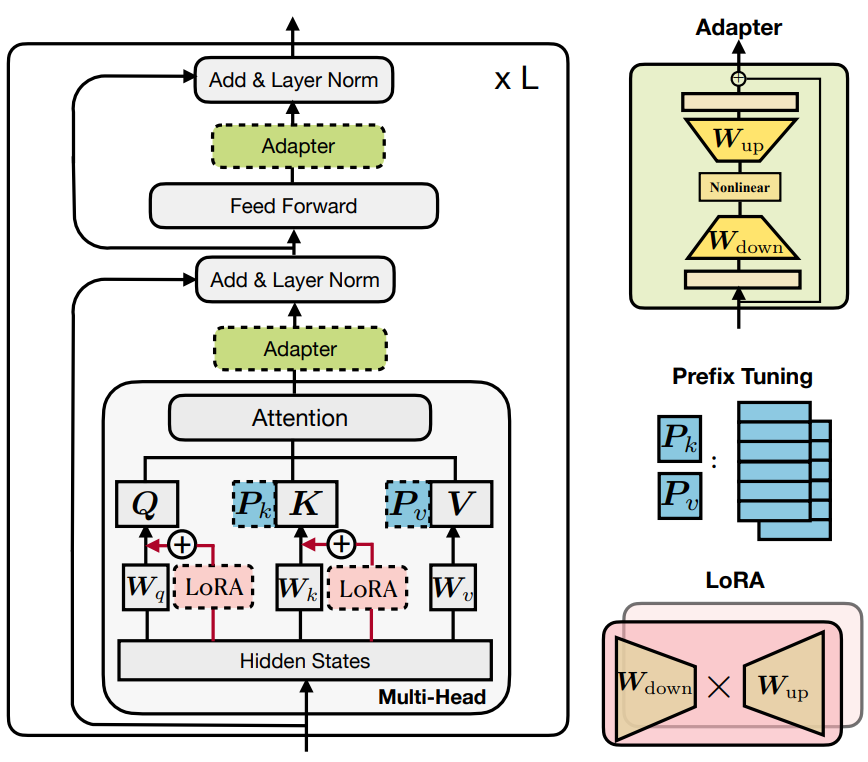

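An Adapter is nothing grandiose: it is simply a small module inserted inside each Transformer layer. 😁 So how can such a small module enable parameter-efficient fine-tuning? As the figure shows, an Adapter consists of three layers: a 'feed-forward down-projection', a 'non-linear layer', and a 'feed-forward up-projection', wrapped in a skip connection. The down-projection maps the original d-dimensional hidden state to a smaller m-dimensional space (m ≪ d), the result passes through the non-linearity, and the up-projection maps it back from m to d dimensions. Because the bottleneck dimension m is small, each Adapter adds only 2md + d + m parameters (including biases), which is why so few parameters need to be updated! 😉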
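The bottleneck described above can be sketched in PyTorch as follows. This is a minimal illustration, not the paper's code: the GELU non-linearity, the zero-initialized up-projection (a simple way to make the module start as an identity map, in the spirit of the paper's near-identity initialization), and the `frozen_sublayer` stand-in for a pre-trained layer are all assumptions made here.

```python
import torch
from torch import nn


class Adapter(nn.Module):
    """Bottleneck adapter sketch: d -> m (down), non-linearity, m -> d (up), plus a skip connection."""

    def __init__(self, d: int, m: int):
        super().__init__()
        self.down = nn.Linear(d, m)  # feed-forward down-projection (d -> m)
        self.act = nn.GELU()         # non-linear layer (GELU is an assumption here)
        self.up = nn.Linear(m, d)    # feed-forward up-projection (m -> d)
        # Zero-init the up-projection so the adapter initially returns its input unchanged.
        nn.init.zeros_(self.up.weight)
        nn.init.zeros_(self.up.bias)

    def forward(self, h: torch.Tensor) -> torch.Tensor:
        # Skip connection: output = input + small learned correction.
        return h + self.up(self.act(self.down(h)))


def count_params(module: nn.Module, trainable_only: bool = False) -> int:
    return sum(p.numel() for p in module.parameters() if p.requires_grad or not trainable_only)


d, m = 768, 64
frozen_sublayer = nn.Linear(d, d)      # hypothetical stand-in for a pre-trained sublayer
frozen_sublayer.requires_grad_(False)  # pre-trained weights stay frozen
adapter = Adapter(d, m)                # only the adapter is trained

x = torch.randn(2, 10, d)              # (batch, seq_len, d)
out = adapter(frozen_sublayer(x))

print(count_params(adapter))                               # 2*d*m + m + d = 99136
print(count_params(frozen_sublayer, trainable_only=True))  # 0
```

With d = 768 and m = 64, the adapter adds 99,136 parameters, matching 2md + d + m, compared with 590,592 for the single frozen d × d layer it wraps in this toy setup.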

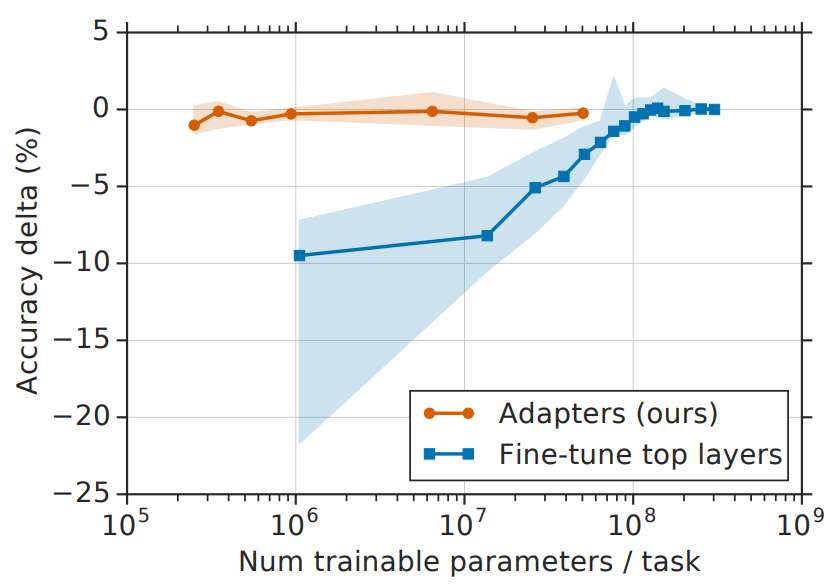

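Despite updating only about 2–3% of the model's parameters, Adapter came within 0.4% of fully fine-tuned BERT_LARGE on the GLUE benchmark! 😮 That is a truly remarkable result, one that overturned much of the conventional thinking about fine-tuning. In short, Adapter clearly showed that parameter-efficient tuning can approach the performance of full fine-tuning.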

Prefix-Tuning: Optimizing Continuous Prompts for Generation (Li & Liang, 2021)

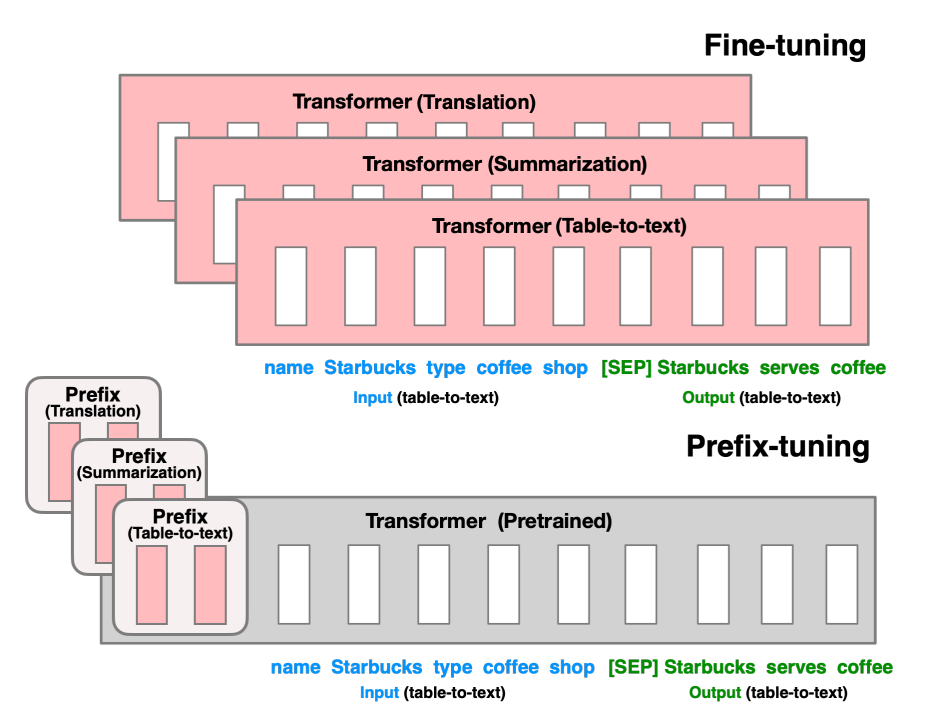

The next paper after Adapter is Prefix-Tuning (I'm not sure it was literally the direct follow-up, but I did read Prefix-Tuning right after Adapter! 😅). Prefix-Tuning was introduced in the 2021 paper 'Prefix-Tuning: Optimizing Continuous Prompts for Generation'. Like Adapter, it points out that standard fine-tuning requires tuning far too many parameters and storing one fully fine-tuned model per task, and it proposes Prefix-Tuning, which tunes only a prefix, to address these problems.

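So what is the 'prefix' here? First, think about GPT-2 and GPT-3, which use few-shot prompting. Few-shot prompting supplies a few examples, and the short instruction given along with those examples is roughly what you can think of as a prefix (e.g., 'Describe the given input.'). The difference is that in Prefix-Tuning the prefix is not made of real words: it is a sequence of continuous, trainable vectors prepended to the input, which is why the paper calls it a 'continuous prompt'. Prefix-Tuning tunes only this prefix while keeping the pre-trained model frozen, so that the model makes better use of the input and produces better output! 🙂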
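To make the 'continuous prompt' idea concrete, here is a minimal single-head self-attention layer in PyTorch in which the pre-trained projections are frozen and the only trainable parameters are prefix key/value vectors prepended to the sequence. It is a simplified sketch, not the paper's implementation: the paper prepends prefix activations at every layer and reparameterizes the prefix with an MLP during training, causal masking is omitted here, and the class name and sizes are hypothetical.

```python
import math

import torch
from torch import nn


class PrefixSelfAttention(nn.Module):
    """Single-head self-attention with trainable prefix keys/values (sketch)."""

    def __init__(self, d: int, prefix_len: int):
        super().__init__()
        # Hypothetical stand-ins for frozen pre-trained projection weights.
        self.q_proj = nn.Linear(d, d)
        self.k_proj = nn.Linear(d, d)
        self.v_proj = nn.Linear(d, d)
        for p in self.parameters():  # only the three projections exist at this point
            p.requires_grad_(False)
        # The trainable part: continuous vectors, not embeddings of real words.
        self.prefix_k = nn.Parameter(torch.randn(prefix_len, d) * 0.02)
        self.prefix_v = nn.Parameter(torch.randn(prefix_len, d) * 0.02)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, d)
        batch, _, d = x.shape
        q = self.q_proj(x)
        # Prepend the same prefix to every sequence: (batch, prefix_len + seq_len, d)
        k = torch.cat([self.prefix_k.expand(batch, -1, -1), self.k_proj(x)], dim=1)
        v = torch.cat([self.prefix_v.expand(batch, -1, -1), self.v_proj(x)], dim=1)
        # Each input position attends over the prefix positions and the real tokens.
        weights = torch.softmax(q @ k.transpose(-2, -1) / math.sqrt(d), dim=-1)
        return weights @ v  # (batch, seq_len, d)


layer = PrefixSelfAttention(d=16, prefix_len=5)
x = torch.randn(2, 7, 16)
out = layer(x)
out.sum().backward()

trainable = [name for name, p in layer.named_parameters() if p.requires_grad]
print(trainable)  # ['prefix_k', 'prefix_v']
```

Gradients reach only `prefix_k` and `prefix_v`; the frozen projections receive none, which is the sense in which only the prefix is tuned.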

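How well did Prefix-Tuning perform? While tuning only 0.1% of the parameters, it matched full fine-tuning in the full-data setting and even outperformed it in low-data settings! 😮 When presenting the results, the paper explains them roughly as follows: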

Because the PLM's weights are frozen and left untouched, the model can fully use the abilities it acquired from general-purpose corpora, which improves performance.

LoRA: Low-Rank Adaptation of Large Language Models (Hu et al., 2021)

If you've ever fine-tuned an LM yourself or worked on an LM project, you've probably heard of LoRA somewhere. It is one of the most widely used PEFT methods today, and QLoRA, which applies quantization on top of LoRA, is also widely used! 😉 You've heard of it, but what exactly is LoRA? 🤭 Let's go through it step by step!

Low Intrinsic Dimension of LM

What idea is LoRA built on? LoRA was inspired by the 2020 paper 'Intrinsic Dimensionality Explains the Effectiveness of Language Model Fine-Tuning' (Aghajanyan et al., 2020). That paper discusses a model's intrinsic dimension: the dimension of a much smaller subspace in which tuning alone achieves performance close to tuning all of the parameters. You can think of it as similar to the rank of a matrix: just as a rank-r matrix is fully determined by r basis directions, the useful part of a fine-tuning update can be captured by tuning only a few directions.

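Inspired by this idea, instead of updating all the parameters, LoRA learns the weight update as a low-rank matrix. As mentioned above, the rank of a matrix is the number of linearly independent basis vectors needed to span its columns, so a low-rank update is one that can be expressed with only a few such directions.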
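A quick NumPy check makes the rank intuition concrete: multiplying a $d \times r$ matrix by an $r \times k$ matrix gives a full-size $d \times k$ matrix whose rank is at most $r$, yet it is described by far fewer numbers. The sizes below ($d = k = 512$, $r = 8$) are arbitrary illustration values, and the factor names `B` and `A` are chosen only to echo the notation LoRA uses.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, r = 512, 512, 8  # arbitrary sizes for illustration

B = rng.standard_normal((d, r))  # "up" factor, d x r
A = rng.standard_normal((r, k))  # "down" factor, r x k
delta_W = B @ A                  # full-size d x k matrix built from the two factors

rank = np.linalg.matrix_rank(delta_W)
full_params = d * k              # numbers needed to store delta_W directly
low_rank_params = d * r + r * k  # numbers needed to store B and A instead

print(delta_W.shape, rank)                                          # (512, 512) 8
print(full_params, low_rank_params, low_rank_params / full_params)  # 262144 8192 0.03125
```

Storing the two factors takes about 3% of the numbers needed for the full matrix, and the gap widens as $d$ and $k$ grow while $r$ stays small.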

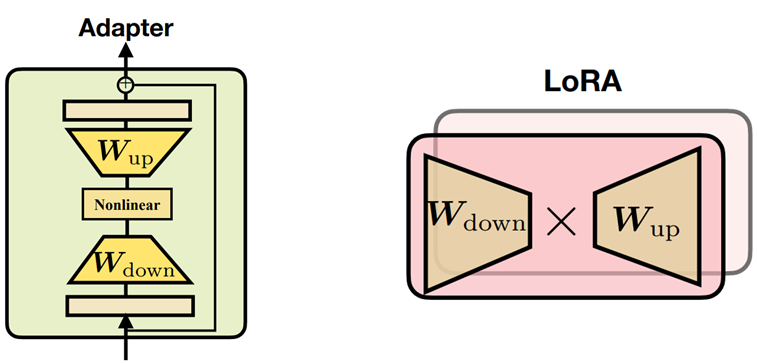

Adapter vs. LoRA

Before looking at LoRA in detail, let's compare it with Adapter. It might seem unfair to show LoRA's structure and compare it with Adapter before even explaining it, but there's an important point here, so I'll start with it! 😅 As the figure shows, both methods use a down-projection and an up-projection. Their structures are quite similar, so keeping the Adapter described above in mind makes LoRA much easier to understand, which is why I'm showing the structure before the explanation! 😊

LoRA: Low-Rank Adaptation

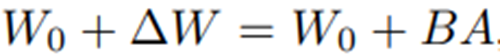

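Like the other methods, LoRA keeps the PLM frozen and tunes only the adapter part. This adapter learns only a low-rank update, which makes the update both more efficient and more effective. The LoRA update can be written as follows:

$$W = W_0 + \Delta W = W_0 + BA, \quad B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k)$$

Here $W_{0} \in \mathbb{R}^{d \times k}$ is the original PLM weight and $\Delta W$ is the weight update learned by the adapter. The adapter is split into $A$ and $B$, which act as the down-projection and the up-projection, respectively. In short, LoRA adds the adapter's learned update to the original PLM weight! 😊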

Applying this to an input $x$ gives the final output $h$:

$$h = W_0 x + \Delta W x = W_0 x + BAx$$
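Here is a minimal PyTorch sketch of LoRA's forward pass for a single linear layer, $h = W_0 x + BAx$. It is an illustration, not the official implementation: following the LoRA paper, $A$ is initialized randomly and $B$ with zeros (so $\Delta W = BA = 0$ at the start) and $\Delta W x$ is scaled by $\alpha / r$, but the class name `LoRALinear`, its default hyperparameters, and the frozen `base` stand-in are assumptions made here.

```python
import torch
from torch import nn


class LoRALinear(nn.Module):
    """h = W0 x + (alpha / r) * B A x, with W0 frozen and only A, B trainable (sketch)."""

    def __init__(self, k: int, d: int, r: int = 8, alpha: float = 16.0):
        super().__init__()
        # W0 (d x k): stand-in for a pre-trained weight, kept frozen.
        self.base = nn.Linear(k, d, bias=False)
        self.base.weight.requires_grad_(False)
        # A (r x k): down-projection, small random init.
        self.A = nn.Parameter(torch.randn(r, k) * 0.01)
        # B (d x r): up-projection, zero init, so Delta W = B A starts at 0.
        self.B = nn.Parameter(torch.zeros(d, r))
        self.scaling = alpha / r

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Row-vector convention: x @ A.T is the down-projection (k -> r), then @ B.T the up-projection (r -> d).
        return self.base(x) + (x @ self.A.T @ self.B.T) * self.scaling

    @torch.no_grad()
    def merged_weight(self) -> torch.Tensor:
        # W = W0 + (alpha / r) * B A: one d x k matrix usable in place of W0 after training.
        return self.base.weight + self.scaling * (self.B @ self.A)


layer = LoRALinear(k=64, d=32, r=4)
x = torch.randn(5, 64)

# At initialization B = 0, so the output equals the frozen base layer's output.
print(torch.equal(layer(x), layer.base(x)))  # True

trainable = sum(p.numel() for p in layer.parameters() if p.requires_grad)
print(trainable)  # r * (k + d) = 4 * (64 + 32) = 384
```

Because $BA$ has the same shape as $W_0$, the trained update can be folded into the frozen weight (`merged_weight`), so the merged layer costs no more at inference than the original one.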

LoRA Experiment Results

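Looking at LoRA's experimental results, with a very small number of updated parameters it sometimes even outperformed standard fine-tuning! 😮 By now this hardly comes as a surprise: updating only a small number of parameters can deliver performance comparable to full fine-tuning.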

The LoRA paper runs many more experiments, analyzing in detail which weights LoRA should be applied to for the best performance and what the optimal rank is, but I won't cover that part in this post. Please bear with me.. 🥲

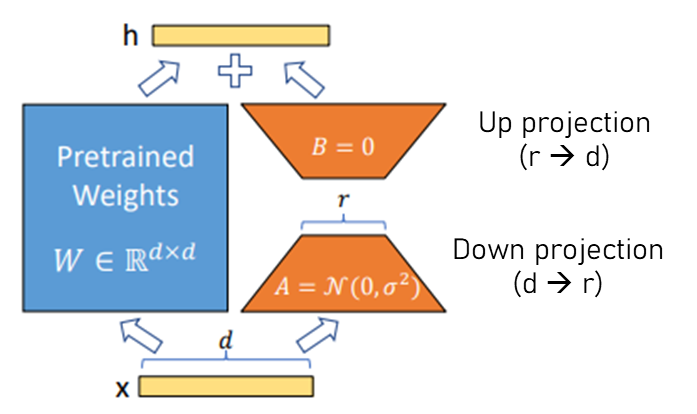

Overall View of PEFT Methods

So far, we've taken a broad look at several PEFT methods. Of course, there are many more besides Adapter, Prefix-Tuning, and LoRA (P-Tuning, (IA)³, etc.), but since we can't cover them all, I've focused on the major ones! 😅 Taken together, the Adapter, Prefix-Tuning, and LoRA methods we've covered can be summarized as in the following figure.

So, WHY?? Why Does PEFT Perform Well?? 🧐

There is actually one very important point I haven't talked about yet: so why? Why?! Why do PEFT methods work so well? How can they not only match full fine-tuning but sometimes even surpass it? Here I'd like to share some reasons, which include my own opinions.

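First, I think PEFT performs well because 'it leaves the PLM untouched'. The PLM's weights encode high-quality abilities learned from general-purpose corpora, and fine-tuning gradually reshapes them to specialize in a particular domain. In the process, some of those high-quality abilities can be lost (a phenomenon often called catastrophic forgetting), so PEFT methods, which barely affect the PLM, can end up performing well.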

Second, I think today's fine-tuning 'updates far more parameters than necessary'. As we saw with LoRA, updating only a low-rank part of the weights, in other words the few directions that matter most, is reportedly enough to rival full fine-tuning. If so, why update every parameter when updating only the important ones would do? I can't say for certain, but I believe this is why PEFT, which updates few but important parameters, performs so well: today's fine-tuning simply updates more parameters than it needs to.

Of course, these arguments may be wrong. If you spot anything incorrect, or know of findings that confirm or refute them, please let me know in the comments! 😊

How to use PEFT Methods? 🫤

We've now seen that PEFT methods really do enable parameter-efficient fine-tuning. So now it's time to use PEFT for something I'd always wanted to do but couldn't because of limited compute: fine-tune an LM myself! 🤓

Fortunately, there's no need to implement these methods yourself: our friend Hugging Face's PEFT library already implements most PEFT methods, so you can run PEFT with the library! Please see the following link for exact usage! 😊

https://huggingface.co/docs/peft/index


One ray of hope, PEFT! ✨

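To everyone who has read this far, I'd like to ask one last question: what did you think as you learned about PEFT? Let me share my own view first. As I mentioned in a previous post, I am a student with quite limited computing resources.. So even when new models came out, I couldn't even dream of trying them, and could only look on at the models others had fine-tuned and released. The more that happened, the more I wanted to fine-tune and use new models myself, and what finally answered that wish was none other than PEFT, because it let me tune models at a far lower cost than full fine-tuning. From where I stand, PEFT truly was 'a ray of hope'. 😊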

Of course, this view depends on each person's situation. If you have enough computing resources, you can simply do full fine-tuning without PEFT. But for students and researchers, I think PEFT is a huge help. I also believe that for a field to advance, it needs both accessibility and extensibility. I think LLMs had limited both in NLP, and that open-source LMs & PEFT have helped bring them back. 😊 I'll wrap up this post hoping for boundless progress in this field! Thank you to everyone who read it!