It makes API calls that even GPT-4 struggles with?!? - Gorilla🦍: Large Language Model Connected with Massive APIs Paper Review

The overview of this paper

LLMs have advanced enormously in recent years, but their potential to use tools effectively through API calls remains unfulfilled. This paper introduces Gorilla🦍, a fine-tuned LLaMA-based model that surpasses GPT-4 at writing API calls. When combined with a document retriever, Gorilla adapts well to test-time changes in documentation, which makes flexible user updates and version changes possible. This substantially mitigates the hallucination problem commonly encountered when prompting LLMs directly. To evaluate Gorilla, the paper also introduces APIBench, a dataset of HuggingFace, TorchHub, and TensorHub APIs.

Table of Contents

1. Introduction

2. Methodology

3. Evaluation

1. Introduction

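The paper explores Self-Instruct fine-tuning and retrieval as ways to let an LLM select the right API from a large, overlapping, and changing set of tools, using the APIs and their documentation. To support this, the authors built APIBench, a large corpus of APIs with complex and often overlapping functionality. They used Self-Instruct to generate 10 user question prompts per API, so each entry in the dataset is an {instruction, reference API} pair. To evaluate the generated API calls, they adopted a common AST sub-tree matching technique. They used it to measure both the functional correctness of LLM outputs and the extent of hallucination.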

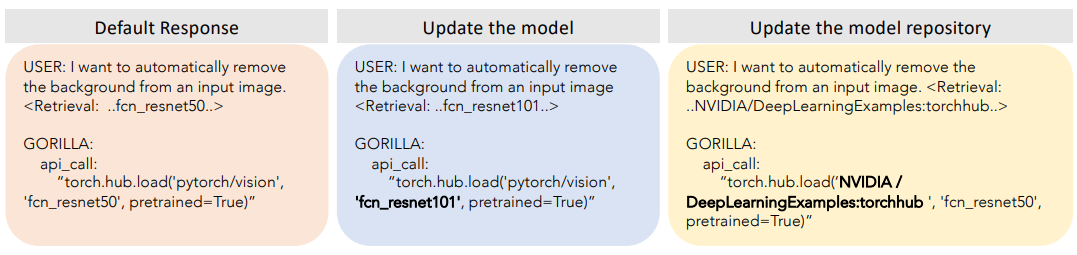

Next, the paper fine-tunes a LLaMA-7B-based model on the APIBench dataset, together with document retrieval, to obtain Gorilla. Gorilla substantially outperforms GPT-4 on two fronts: the functional accuracy of its API calls and the reduction of hallucination errors. Figure 1 shows an example output. In addition, Gorilla's retriever-aware training lets the model adapt to changes in API documentation.

2. Methodology

2-1. Dataset Collection

To build the dataset, the authors carefully recorded every online model card for HuggingFace's 'The Model Hub', PyTorch Hub, and TensorFlow Hub models.

API Documentation. The model cards for the 1,645 API calls collected from HuggingFace Hub, TensorFlow Hub, and Torch Hub were each converted into a JSON object with the following fields: {domain, framework, functionality, api_name, api_call, api_arguments, environment_requirements, example_code, performance, description}. These fields were chosen so the format generalizes beyond ML API calls to other domains, including RESTful APIs.
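To make the record format concrete, here is a minimal sketch of one API documentation entry with exactly the ten fields listed above. The helper `make_api_doc` is hypothetical, and the example values are illustrative rather than copied from APIBench.

```python
import json

# The ten fields of the API documentation format described above.
API_DOC_FIELDS = (
    "domain", "framework", "functionality", "api_name", "api_call",
    "api_arguments", "environment_requirements", "example_code",
    "performance", "description",
)


def make_api_doc(**fields):
    """Build one API doc record, rejecting unknown or missing fields (hypothetical helper)."""
    unknown = set(fields) - set(API_DOC_FIELDS)
    missing = set(API_DOC_FIELDS) - set(fields)
    if unknown or missing:
        raise ValueError(f"unknown={sorted(unknown)} missing={sorted(missing)}")
    # Keep a stable field order so serialized docs are easy to compare.
    return {name: fields[name] for name in API_DOC_FIELDS}


# Illustrative entry; the values are examples, not taken from the dataset.
doc = make_api_doc(
    domain="Image classification",
    framework="PyTorch",
    functionality="Classify images into ImageNet categories",
    api_name="densenet121",
    api_call="torch.hub.load('pytorch/vision', 'densenet121', pretrained=True)",
    api_arguments=["repo_or_dir", "model", "pretrained"],
    environment_requirements=["torch", "torchvision"],
    example_code="model = torch.hub.load('pytorch/vision', 'densenet121', pretrained=True)",
    performance={"dataset": "ImageNet"},
    description="Densely connected convolutional network.",
)
print(json.dumps(doc, indent=2))
```

Serializing to JSON matters later, because the retriever-aware prompt embeds the document as JSON text.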

Instruction Generation. To generate synthetic instruction data, the authors ran Self-Instruct with GPT-4. They provide three in-context examples along with the reference API documentation and ask the model to generate real-world use cases that call the API. For each of the 1,645 API datapoints, they sample 3 of the 6 corresponding instruction examples and generate a total of 10 instruction-API pairs.
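The sampling step above can be sketched as follows: pick 3 of the 6 seed instructions, attach the reference API doc, and ask for 10 new instructions. This is a sketch under assumptions. The seed strings, the prompt wording, and `build_self_instruct_prompt` are all hypothetical, and the actual GPT-4 call is not shown.

```python
import json
import random

# Hypothetical stand-ins for the 6 hand-written seed instructions.
SEED_EXAMPLES = [
    "I need to sort my vacation photos into categories like beach and mountain.",
    "Build a tool that turns English product descriptions into French.",
    "Detect whether a customer review is positive or negative.",
    "Transcribe the audio from my recorded lecture into text.",
    "Find the objects present in a street-camera image.",
    "Summarize a long news article into a few sentences.",
]


def build_self_instruct_prompt(api_doc, seed_examples, rng, n_shots=3, n_instructions=10):
    """Build a Self-Instruct prompt for one API: n_shots sampled in-context
    examples plus the reference API doc, asking for n_instructions new ones."""
    shots = rng.sample(seed_examples, n_shots)  # sample without replacement
    parts = ["Example instructions:"]
    parts += [f"{i}. {ex}" for i, ex in enumerate(shots, start=1)]
    parts.append("Reference API documentation:")
    parts.append(json.dumps(api_doc))
    parts.append(f"Write {n_instructions} realistic user instructions that this API would solve.")
    return "\n".join(parts), shots


rng = random.Random(0)  # fixed seed so the sampled shots are reproducible
doc = {"api_name": "hypothetical-image-classifier", "functionality": "Image classification"}
prompt, shots = build_self_instruct_prompt(doc, SEED_EXAMPLES, rng)
# `prompt` would then be sent to GPT-4 (not shown here).
```

Re-sampling the three shots for each API keeps the generated instructions from all copying the same few examples.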

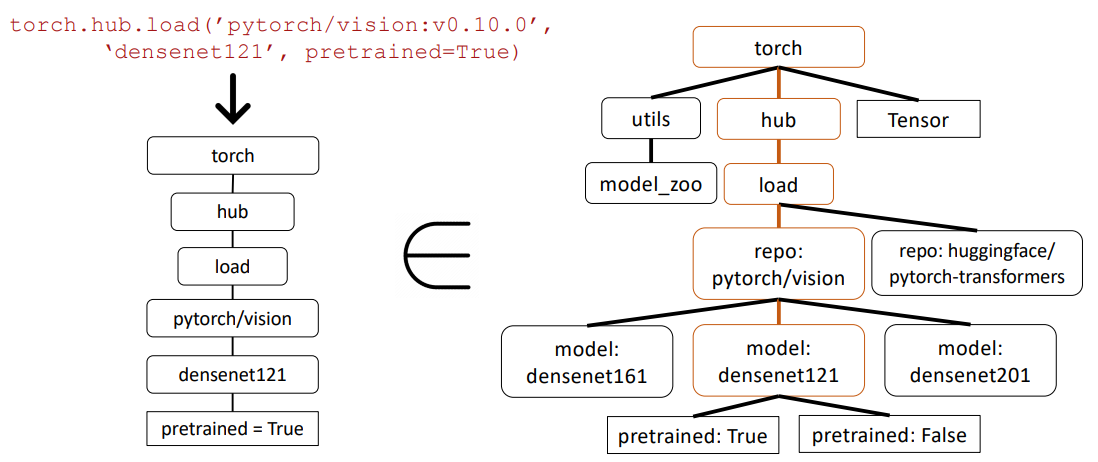

2-2. Gorilla

Concretely, Gorilla is a LLaMA-7B model fine-tuned for API calls in a retriever-aware manner. As shown in Figure 3, the authors used Self-Instruct to generate {instruction, API} pairs. To fine-tune LLaMA, they converted these pairs into user-agent chat-style conversations. Each data point is a single round of conversation: one turn for the user and one for the agent. They then ran standard instruction fine-tuning on the base LLaMA-7B model. For the experiments, they trained Gorilla both with and without a retriever.
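The conversion into single-round chat data might look like the sketch below. The `USER:`/`ASSISTANT:` template is a hypothetical Vicuna-like format, not necessarily the one the paper used. Splitting the text into (prompt, target) reflects a common instruction-tuning choice of computing the loss only on the target; that choice is an assumption here.

```python
def to_chat_example(instruction, api_call):
    """Wrap one {instruction, API} pair as a single user/agent round."""
    return [
        {"role": "user", "content": instruction},
        {"role": "assistant", "content": api_call},
    ]


def render_for_training(conversation, template="USER: {user}\nASSISTANT: "):
    """Flatten a single-round conversation into (prompt, target) strings.

    The template is hypothetical. A common (assumed) choice is to compute the
    fine-tuning loss on the target tokens only.
    """
    if len(conversation) != 2:
        raise ValueError("expected exactly one user turn and one assistant turn")
    user, assistant = conversation
    if user["role"] != "user" or assistant["role"] != "assistant":
        raise ValueError("turns must be ordered user, assistant")
    return template.format(user=user["content"]), assistant["content"]


conv = to_chat_example(
    "Classify the objects in this photo.",
    "torch.hub.load('pytorch/vision', 'densenet121', pretrained=True)",
)
prompt, target = render_for_training(conv)
```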

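API Call with Constraints. API calls often come with inherent constraints. To handle them, an LLM must understand what an API does and also categorize calls by their different constraint parameters. It must understand the user's functional description and, in addition, reason about the various constraints embedded in the request. Understanding the basic functionality of an API call is therefore not enough: the model also has to navigate the complex landscape of constraints that accompany such calls. This observation shows why LLMs need to be fine-tuned specifically for APIs.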
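To make "constraints" concrete, the sketch below treats a request such as "an image classifier with fewer than 10M parameters and at least 70% accuracy" as an explicit filter over candidate APIs. The catalog, its numbers, and the tie-break policy are hypothetical. Gorilla itself has to infer this kind of selection from the text of the request; it does not run an explicit filter like this one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CandidateAPI:
    api_name: str
    params_millions: float  # model size in millions of parameters
    top1_accuracy: float    # accuracy in percent on the task's benchmark


def pick_api(candidates, max_params_millions, min_accuracy) -> Optional[CandidateAPI]:
    """Return the most accurate API satisfying both constraints, or None."""
    feasible = [
        c for c in candidates
        if c.params_millions < max_params_millions and c.top1_accuracy >= min_accuracy
    ]
    # Preferring the highest accuracy is one reasonable policy, assumed here.
    return max(feasible, key=lambda c: c.top1_accuracy, default=None)


# Hypothetical catalog; names and numbers are made up for illustration.
CATALOG = [
    CandidateAPI("model_a", 5.0, 68.0),
    CandidateAPI("model_b", 8.0, 74.0),
    CandidateAPI("model_c", 25.0, 79.0),
]

best = pick_api(CATALOG, max_params_millions=10.0, min_accuracy=70.0)
```

Here `model_c` is the most accurate candidate but violates the size constraint, and `model_a` fits the size limit but misses the accuracy threshold. That leaves `model_b`.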

Retriever-Aware training. To train with a retriever, the authors augment the instruction-tuning dataset by appending "Use this API documentation for reference: <retrieved_API_doc_JSON>" to each user prompt. The goal is to teach the LLM to parse the second half of the prompt (the documentation) in order to answer the first half (the question). This has the following effects:

- It lets the model adapt to test-time changes in the documentation

- It improves performance through in-context learning

- It reduces hallucination errors
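The augmentation described above can be sketched as a function that appends the reference doc, serialized as JSON, to the user turn of an existing single-round conversation. The assistant turn (the target API call) stays unchanged. The newline separator and the function name `with_reference_doc` are assumptions. The prefix string is the one quoted in the paragraph above.

```python
import json

REFERENCE_PREFIX = "Use this API documentation for reference: "


def with_reference_doc(conversation, reference_doc):
    """Return a copy of a single-round user/assistant conversation whose user
    turn has the reference API doc (as JSON) appended after the instruction."""
    user, assistant = conversation
    augmented_user = dict(
        user, content=f"{user['content']}\n{REFERENCE_PREFIX}{json.dumps(reference_doc)}"
    )
    return [augmented_user, dict(assistant)]  # the target API call is unchanged


doc = {"api_name": "densenet121",
       "api_call": "torch.hub.load('pytorch/vision', 'densenet121', pretrained=True)"}
conversation = [
    {"role": "user", "content": "Classify the objects in this photo."},
    {"role": "assistant", "content": doc["api_call"]},
]
training_example = with_reference_doc(conversation, doc)
```

Because the documentation sits in the prompt rather than in the weights, the model learns to read it. That is what lets a newer document steer the answer at test time.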

Gorilla Inference. At inference time, Gorilla's prompt works in two modes: zero-shot and with retrieval.

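- zero-shot setting: the user's prompt is fed directly to the Gorilla LLM, which returns an API call that helps accomplish the task and goal

- with retrieval: the retriever first fetches the most up-to-date API documentation stored in the API database, and this documentation is then concatenated to the user prompt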
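The two modes can be sketched as one prompt builder with an optional retriever. In zero-shot mode the query passes through unchanged. In retrieval mode the retrieved doc is appended using the same phrase quoted for retriever-aware training. The newline separator and the stub retriever are assumptions for illustration.

```python
import json
from typing import Callable, Optional

REFERENCE_PREFIX = "Use this API documentation for reference: "


def build_inference_prompt(query: str,
                           retriever: Optional[Callable[[str], dict]] = None) -> str:
    """Zero-shot: return the query unchanged. With retrieval: append the
    retrieved doc in the same format assumed for retriever-aware training."""
    if retriever is None:
        return query
    doc = retriever(query)
    return f"{query}\n{REFERENCE_PREFIX}{json.dumps(doc)}"


def fixed_retriever(query: str) -> dict:
    """Hypothetical stub retriever that always returns one fixed doc."""
    return {"api_name": "densenet121",
            "api_call": "torch.hub.load('pytorch/vision', 'densenet121', pretrained=True)"}


zero_shot = build_inference_prompt("Classify this photo.")
with_retrieval = build_inference_prompt("Classify this photo.", fixed_retriever)
```

Keeping the inference format identical to the training format is the design point: a model trained to read the appended doc should see it in the same place and wording at test time.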

2-3. Verifying APIs

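To evaluate Gorilla, the authors use the collected dataset to check the functional equivalence of generated API calls. To identify which API in the dataset the LLM is calling, they adopt an AST tree-matching strategy: if the candidate API call is a sub-tree of a reference API call, then that reference API is the one being called.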

Hallucination is hard to define, so the paper defines it through AST tree matching: a hallucination is an API call that is not a sub-tree of any API in the database. In other words, calling an entirely imagined tool counts as a hallucination.

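AST Sub-Tree Matching. The paper performs AST sub-tree matching to determine which API in the dataset the LLM is calling. An API call can take many arguments, and Python allows default arguments. For each API, the paper therefore specifies which arguments must match, and optional arguments are not checked.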
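Below is a minimal sketch of AST sub-tree matching with Python's `ast` module. It assumes a match means the same dotted function name plus the same values for a per-API list of key arguments, each of which may be passed positionally or by keyword. Here those are the first two parameters of `torch.hub.load`, `repo_or_dir` and `model`. Optional arguments such as `pretrained=True` are ignored. The three labels follow the definitions above:

- a call matching the reference is correct,
- a call matching some other database API is a wrong call,
- a call matching none is a hallucination.

The database and the helper names are hypothetical; this is not the paper's evaluation code.

```python
import ast


def _dotted_name(node):
    """'torch.hub.load' for a Name/Attribute chain; None for anything else."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if not isinstance(node, ast.Name):
        return None
    parts.append(node.id)
    return ".".join(reversed(parts))


def _describe(call):
    """(function name, positional args, keyword args), with args as AST dumps."""
    args = [ast.dump(a) for a in call.args]
    kwargs = {kw.arg: ast.dump(kw.value) for kw in call.keywords if kw.arg is not None}
    return _dotted_name(call.func), args, kwargs


def _key_values(args, kwargs, key_args):
    # Each key argument may be passed positionally or by keyword.
    return [args[pos] if pos < len(args) else kwargs.get(name) for pos, name in key_args]


def matches(candidate_code, reference_call, key_args):
    """True if some call in candidate_code has the reference's function name and
    the same key-argument values; other (optional) arguments are ignored."""
    ref = ast.parse(reference_call, mode="eval").body
    if not isinstance(ref, ast.Call):
        raise ValueError("reference must be a single call expression")
    ref_name, ref_args, ref_kwargs = _describe(ref)
    wanted = _key_values(ref_args, ref_kwargs, key_args)
    try:
        tree = ast.parse(candidate_code)
    except SyntaxError:
        return False  # assumption: unparsable output matches no API
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name, args, kwargs = _describe(node)
            if name == ref_name and _key_values(args, kwargs, key_args) == wanted:
                return True
    return False


def classify(candidate_code, reference, database):
    """reference and each database entry are (call_string, key_args) pairs."""
    if matches(candidate_code, *reference):
        return "correct"
    if any(matches(candidate_code, *entry) for entry in database):
        return "wrong_api"  # a real API, just not the requested one
    return "hallucination"  # not a sub-tree match for any API in the database


# torch.hub.load(repo_or_dir, model, ...): match on the first two arguments only.
HUB_KEYS = [(0, "repo_or_dir"), (1, "model")]
DATABASE = [
    ("torch.hub.load('pytorch/vision', 'densenet121', pretrained=True)", HUB_KEYS),
    ("torch.hub.load('pytorch/vision', 'resnet50', pretrained=True)", HUB_KEYS),
]
```

For example, `torch.hub.load(repo_or_dir='pytorch/vision', model='densenet121')` counts as correct against the first entry, even though it uses keywords and omits `pretrained`.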

3. Evaluation

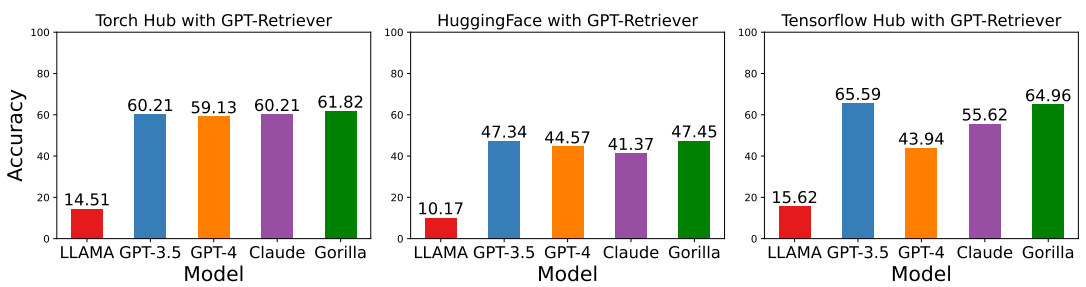

The paper benchmarks Gorilla and other models on the collected dataset and explores how different retrieval methods affect each model's ability to generate API calls.

Baselines. Gorilla is compared with other state-of-the-art models in the zero-shot setting: GPT-4, GPT-3.5-Turbo, Claude, and LLaMA-7B.

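Retrievers. "Zero-shot" refers to the scenario where no retriever is used, so the only input to the model is the user's natural-language prompt. With retrieval, the user's query is used to search the index and fetch the most relevant API. That API documentation is then concatenated with the user's prompt before the LLM is queried.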
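BM25 is one of the retrievers the paper compares. Here is a from-scratch sketch of BM25 ranking over API doc strings. It assumes simple lowercase alphanumeric tokenization, the Lucene-style IDF, and the conventional defaults k1 = 1.2 and b = 0.75. None of these are the paper's documented index settings, and the three doc strings are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphanumeric tokens; a deliberately simple tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Index:
    def __init__(self, docs, k1=1.2, b=0.75):
        if not docs:
            raise ValueError("need at least one document")
        self.k1, self.b = k1, b
        self.doc_tokens = [tokenize(d) for d in docs]
        self.term_freqs = [Counter(toks) for toks in self.doc_tokens]
        self.avg_len = sum(len(t) for t in self.doc_tokens) / len(docs) or 1.0
        n = len(docs)
        doc_freq = Counter(term for toks in self.doc_tokens for term in set(toks))
        # Lucene-style IDF: log(1 + (N - df + 0.5) / (df + 0.5)), never negative.
        self.idf = {t: math.log(1 + (n - df + 0.5) / (df + 0.5)) for t, df in doc_freq.items()}

    def score(self, query, i):
        """BM25 score of document i for the query."""
        tf, length = self.term_freqs[i], len(self.doc_tokens[i])
        norm = self.k1 * (1 - self.b + self.b * length / self.avg_len)
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f:
                total += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return total

    def top1(self, query):
        """Index of the highest-scoring document (ties go to the lower index)."""
        return max(range(len(self.doc_tokens)), key=lambda i: self.score(query, i))


# Hypothetical API doc descriptions.
API_DOCS = [
    "densenet121 image classification model for ImageNet images",
    "translation model that translates French text into English",
    "speech recognition model that transcribes English audio",
]
index = BM25Index(API_DOCS)
query = "translate a French sentence into English"
best_doc = API_DOCS[index.top1(query)]
```

The top-ranked doc is what gets appended to the user's prompt. A lexical retriever like this misses paraphrases ("translate" does not match "translates" here), which is one reason a weak retriever can mislead the model.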

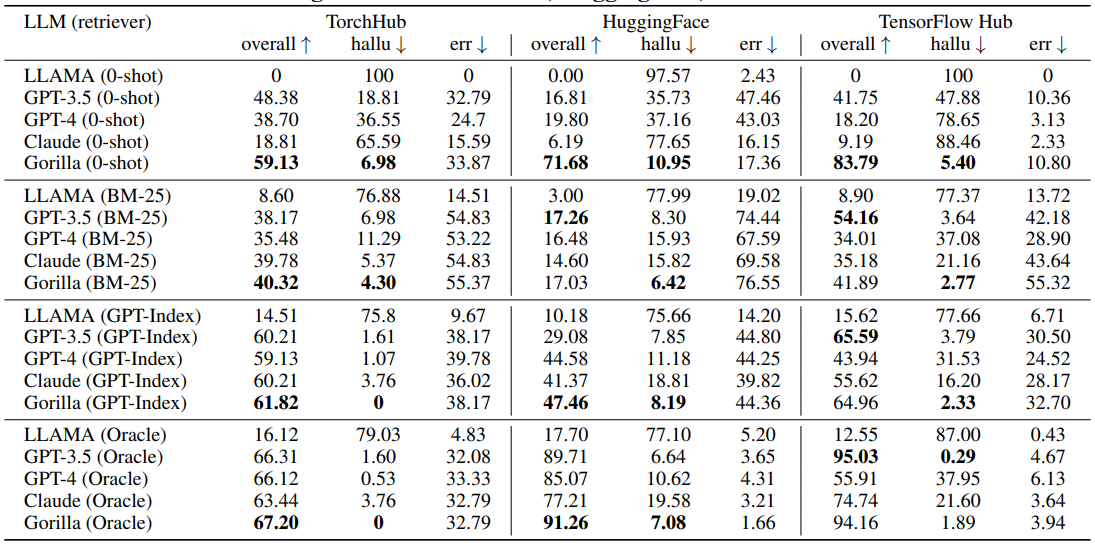

3-1. AST Accuracy on API call

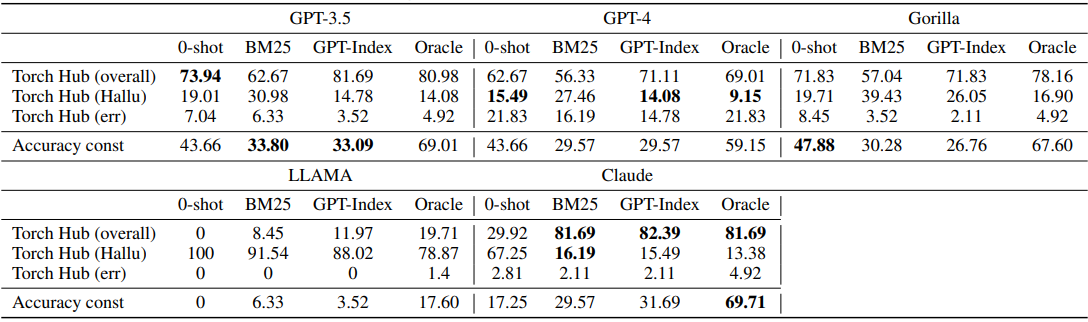

The paper reports AST accuracy for each model, with results shown in Table 1. Every model is evaluated under several retriever settings.
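A results table like Table 1 is an aggregate over per-example labels. The sketch below assumes each generated call has already been labeled by the AST matching of section 2-3 with one of three labels: correct, a hallucination, or a call to a real but wrong API. It then computes per-(model, retriever) rates. The toy labels are invented only to exercise the function and are not results from the paper.

```python
from collections import Counter, defaultdict


def summarize(results):
    """Aggregate per-example labels into per-(model, retriever) rates.

    `results` holds (model, retriever, label) triples where label is one of
    'correct', 'wrong_api', or 'hallucination'.
    """
    counts = defaultdict(Counter)
    for model, retriever, label in results:
        counts[(model, retriever)][label] += 1
    table = {}
    for key, c in counts.items():
        total = sum(c.values())
        table[key] = {
            "accuracy": c["correct"] / total,
            "hallucination": c["hallucination"] / total,
            "wrong_api": c["wrong_api"] / total,
        }
    return table


# Toy labels for one hypothetical model/retriever pair (not real results).
toy = [
    ("model_x", "zero-shot", "correct"),
    ("model_x", "zero-shot", "correct"),
    ("model_x", "zero-shot", "hallucination"),
    ("model_x", "zero-shot", "wrong_api"),
]
summary = summarize(toy)
```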

Finetuning without Retrieval. Table 1 shows that a lightly fine-tuned Gorilla achieves state-of-the-art performance in the zero-shot setting. This suggests that, at least in this domain, fine-tuning is more effective than retrieval.

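Furthermore, adding the ground-truth retriever at test time lowers performance slightly, while using BM25 or GPT-Index as the retriever lowers it significantly. These results suggest that adding a suboptimal retriever at test time can mislead the model and cause more errors.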

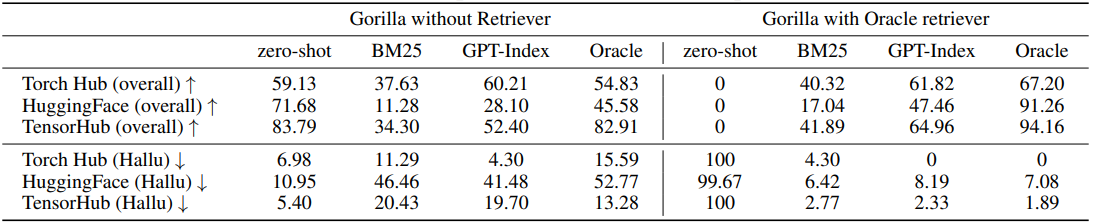

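Finetuning with Retrieval. The paper also examines how fine-tuning the LM together with a retriever affects performance. For this experiment, the base LLaMA is fine-tuned on the prompt, the reference API documentation, and the examples generated by GPT-4. As Table 2 shows, using the ground-truth retriever in the fine-tuning pipeline gives significantly better results than fine-tuning without a retriever. However, the evaluation also reveals a large gap between current retrievers and the ground-truth retriever. Even so, the authors conclude that fine-tuning with a better retriever is still the better method.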

Hallucination with LLM. One phenomenon the paper observes is that zero-shot prompting an LLM to call APIs leads to severe hallucination. Surprisingly, the authors also find that GPT-3.5 hallucinates less than GPT-4. They take this as a sign that RLHF plays a central role in making models truthful.

3-2. Test-time Documentation Change

API documentation evolves quickly and often outpaces how often LLMs are retrained or fine-tuned. This mismatch in update frequency can reduce an LLM's usefulness and reliability. With retriever-aware training, however, Gorilla can adapt immediately to changes in API documentation, which lets the model stay up to date and relevant.

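For example, consider the scenario in Figure 6, where Gorilla's training allows it to respond effectively to changes in the API. This capability keeps the LLM relevant and accurate even as the underlying models and systems are upgraded and improved. It also shows the model can adapt to changes in API sources, which matters because an organization may change its preferred model registry over time.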
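Why no retraining is needed can be sketched with a hypothetical in-memory documentation store. Updating one entry, for example a segmentation API whose backbone moves from ResNet-50 to ResNet-101, changes the documentation placed in the prompt while the model weights stay untouched. The store class and key are hypothetical, exact-key lookup stands in for a real retriever, and the prompt format reuses the phrase quoted for retriever-aware training.

```python
import json

REFERENCE_PREFIX = "Use this API documentation for reference: "


class APIDocStore:
    """Hypothetical in-memory API documentation database.

    Each logical API key maps to its latest documentation, so updating a doc
    changes what gets retrieved without touching the model's weights.
    """

    def __init__(self):
        self._docs = {}

    def upsert(self, key, doc):
        self._docs[key] = doc  # newest documentation replaces the old one

    def retrieve(self, key):
        # Simplification: exact-key lookup stands in for a real retriever.
        return self._docs[key]


store = APIDocStore()
query = "Segment the people in this street photo."
store.upsert("semantic-segmentation",
             {"api_call": "torch.hub.load('pytorch/vision', 'fcn_resnet50', pretrained=True)"})
before = f"{query}\n{REFERENCE_PREFIX}{json.dumps(store.retrieve('semantic-segmentation'))}"

# The documentation changes (e.g. a backbone upgrade); only the store is updated.
store.upsert("semantic-segmentation",
             {"api_call": "torch.hub.load('pytorch/vision', 'fcn_resnet101', pretrained=True)"})
after = f"{query}\n{REFERENCE_PREFIX}{json.dumps(store.retrieve('semantic-segmentation'))}"
```

A retriever-aware model reading `after` sees the new call in its prompt, so the documentation update reaches the model at inference time instead of waiting for the next fine-tuning run.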

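In summary, Gorilla's ability to adapt to test-time changes in API documentation keeps it accurate and relevant over time. It keeps pace with frequent documentation updates and accommodates changes to the underlying models and systems. Together, these properties make Gorilla a robust and reliable tool for API calls.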

3-3. API Call with Constraints

This evaluation focuses on how well LMs understand constraints. The results are shown in Table 3.

Adding constraints lowers accuracy for every model, with or without a retriever. With retrieval, Gorilla matches the performance of GPT-3.5, and in the zero-shot setting it achieves the best performance. This highlights Gorilla's ability to handle APIs while weighing the trade-offs between different constraints.

Source

https://arxiv.org/abs/2305.15334

Gorilla: Large Language Model Connected with Massive APIs
