Noise makes LLMs better! - NEFTune 😉

What is the biggest difference between NLP and CV? 😮

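There is probably more than one thing about this post that seems puzzling, starting with its title. Why talk about looking back all of a sudden, and why ask what the biggest difference between CV and NLP is? To get to what this post is about, though, that difference is worth revisiting! So let me start with a question for you, the reader: what is the biggest difference between NLP and CV? Asked this abstractly, I expect answers like these. 😁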

- The data they use is different (text vs. images).

- The models they use are different.

- The way models are trained is different.

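Those answers are all correct, of course. However, the biggest difference between the two research communities that I want to focus on in this post is "research on regularization." I apologize for phrasing the question a bit loosely. 😅 So what does that mean? Why bring up regularization research out of nowhere?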

Before discussing regularization, let's briefly look at the research trends in each field.

- Computer Vision: research on regularization and overfitting has been very active.

- Natural Language Processing: research has mainly focused on improving performance by training models on new, high-quality data.

As this shows, CV has an active line of research on regularization and overfitting. NLP research, by contrast, still mostly tries to improve model performance by training on newer, higher-quality data. Research on prompting, fine-tuning, RLHF, and similar topics is of course also very active. Compared with CV, however, regularization and overfitting have not yet been studied sufficiently in NLP.

In this post, I introduce a paper that targets exactly this gap in NLP: "NEFTune: Noisy Embeddings Improve Instruction Finetuning" (Jain et al., 2023)! 🤗

NEFTune, the new paradigm of model training ✨

Introduction

"NEFTune: Noisy Embeddings Improve Instruction Finetuning" was released only about a week before this post was written. The paper claims that adding one very simple trick to standard fine-tuning improves performance far beyond what standard fine-tuning achieves. Judging by the experimental results, NEFTune does look like an attractive training method.

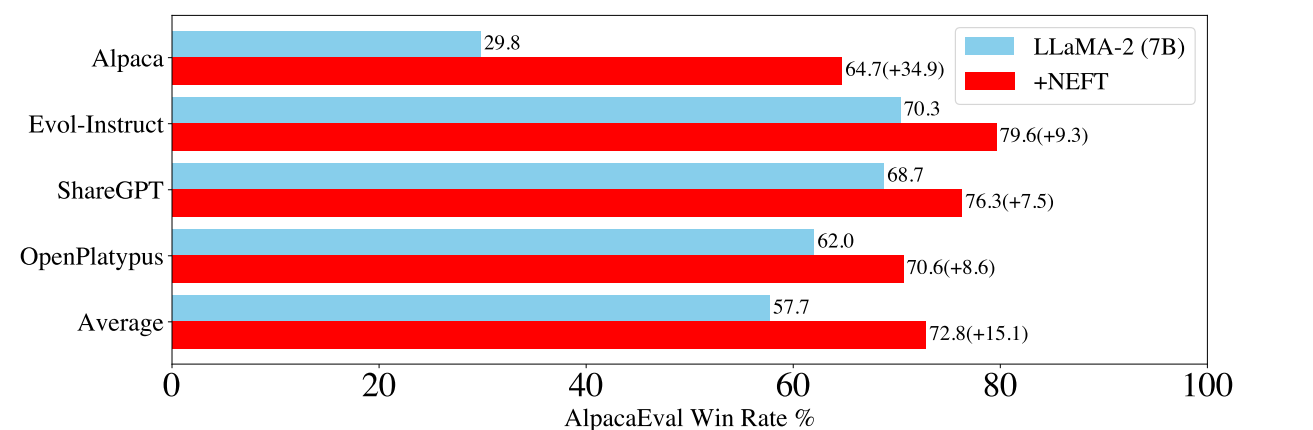

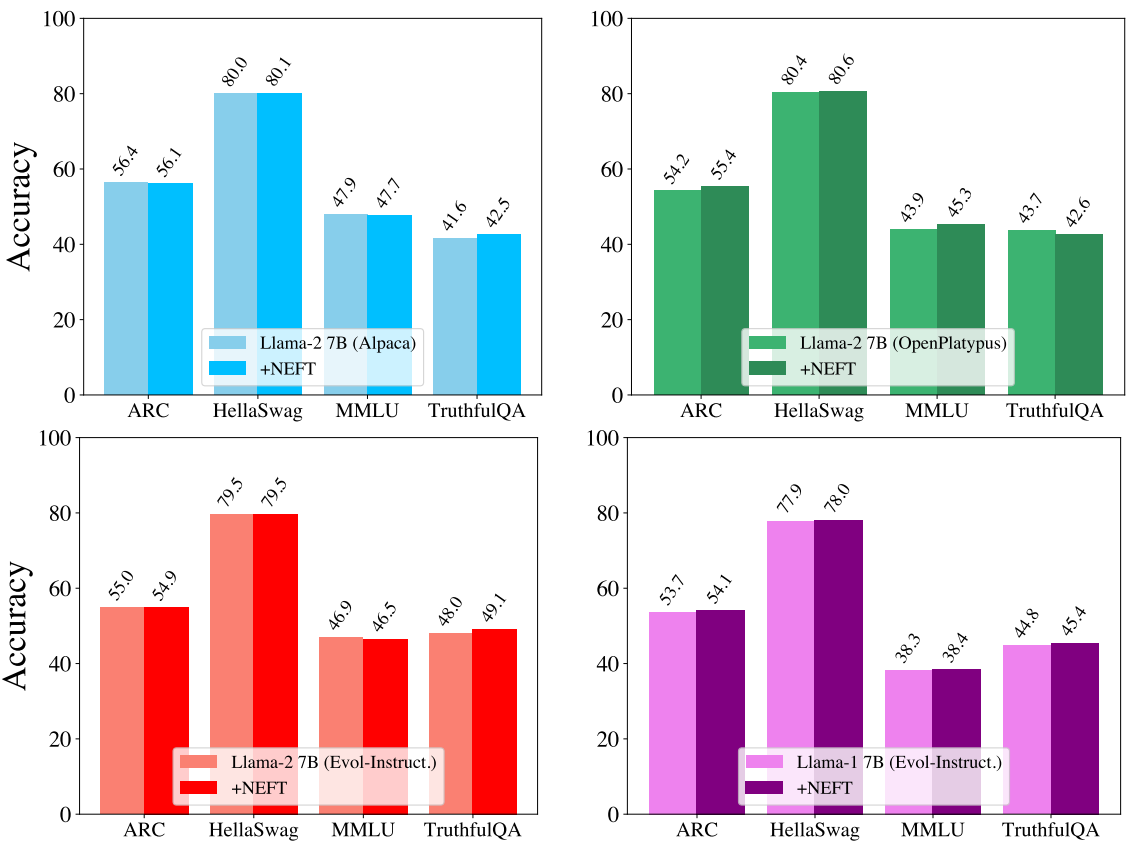

The figures above show, even at a glance, that NEFTune performs much better than standard fine-tuning. So what does NEFTune do that lets such a simple method improve performance so effectively?

NEFTune

Instruction-tuned models are usually trained on datasets of instruction-response pairs. NEFTune works the same way. Each training step begins by sampling an instruction from the dataset and converting its tokens into embedding vectors. NEFTune then adds a random noise vector to those embeddings and continues with standard training. That is all there is to NEFTune! It is so simple that it almost seems suspicious; in fact, the paper describes the method in only about nine lines. And with this simple change, NEFTune substantially outperforms standard fine-tuning!
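To make the training step described above concrete, here is a toy sketch in plain Python with no deep-learning library. It is an illustration under stated assumptions, not the paper's code. The embedding table, the token ids and the helper names `embed` and `neftune_embed` are all hypothetical. The batch dimension is omitted because the scaling factor depends only on $L$ and $d$. Noise is skipped when `training=False`, since NEFTune adds noise only at training time.

```python
import math
import random

# Hypothetical toy embedding table: vocabulary of 4 tokens, embedding dimension d = 3
EMBEDDING_TABLE = [
    [0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6],
    [0.7, 0.8, 0.9],
    [1.0, 1.1, 1.2],
]


def embed(token_ids):
    """Look up one embedding vector per token, giving an L x d nested list."""
    return [list(EMBEDDING_TABLE[t]) for t in token_ids]


def neftune_embed(token_ids, alpha, training=True, rng=random):
    """Embed a token sequence; in training mode add noise scaled by alpha / sqrt(L * d)."""
    x = embed(token_ids)
    if not training:
        return x  # no noise is added outside of training
    L, d = len(x), len(x[0])
    scale = alpha / math.sqrt(L * d)
    # epsilon ~ Uniform(-1, 1), drawn i.i.d. for every entry, then scaled and added
    return [[v + scale * rng.uniform(-1.0, 1.0) for v in row] for row in x]


# One toy step: embed a (hypothetical) tokenized training example with noise.
# In a real trainer, the noisy embeddings would then flow through the rest of the model and the loss.
example_ids = [0, 2, 3]
noisy = neftune_embed(example_ids, alpha=5.0)
clean = neftune_embed(example_ids, alpha=5.0, training=False)
print(len(noisy), len(noisy[0]))
```

Only the embedding output changes. The rest of the training loop (forward pass, loss, optimizer step) stays exactly as in standard fine-tuning, which is why the method is so easy to drop in.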

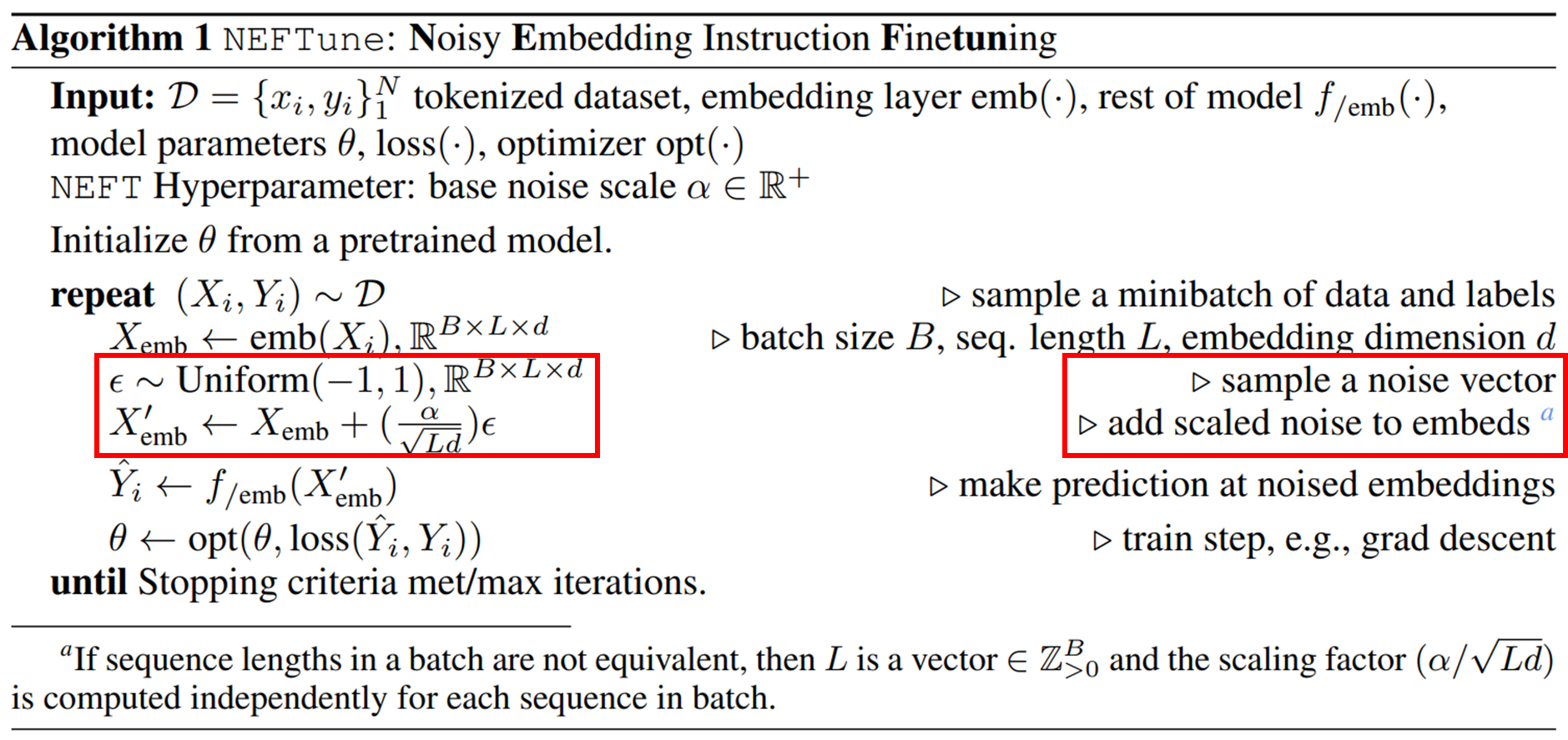

Compared with standard fine-tuning, NEFTune's algorithm adds only the following steps.

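- $\epsilon \sim \mathrm{Uniform}(-1, 1)$, $\epsilon \in \mathbb{R}^{B \times L \times d}$: sample every entry of the noise tensor i.i.d. from the uniform distribution on $[-1, 1]$, where $B$ is the batch size, $L$ the sequence length, and $d$ the embedding dimension

- $\frac{\alpha}{\sqrt{Ld}}\,\epsilon$: scale the noise by the factor $\alpha/\sqrt{Ld}$, where $\alpha$ is a tunable noise-scale hyperparameter

- $X'_{emb} \leftarrow X_{emb} + \frac{\alpha}{\sqrt{Ld}}\,\epsilon$: add the scaled noise to the original embeddings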
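A short calculation suggests why $\alpha/\sqrt{Ld}$ is a natural scaling factor: it makes the expected size of the noise independent of sequence length and embedding dimension. Assume the $Ld$ entries of $\epsilon$ for one sequence are i.i.d. $\mathrm{Uniform}(-1,1)$, so each has mean $0$ and $\mathbb{E}[\epsilon_{ij}^2] = \int_{-1}^{1} \tfrac{x^2}{2}\,dx = \tfrac{1}{3}$. Then:

$$
\mathbb{E}\left[\left\lVert \frac{\alpha}{\sqrt{Ld}}\,\epsilon \right\rVert_2^2\right]
= \frac{\alpha^2}{Ld}\sum_{i=1}^{L}\sum_{j=1}^{d}\mathbb{E}\left[\epsilon_{ij}^2\right]
= \frac{\alpha^2}{Ld}\cdot Ld\cdot\frac{1}{3}
= \frac{\alpha^2}{3}
$$

The scaled noise added to each sequence therefore has a root-mean-square Euclidean norm of $\alpha/\sqrt{3}$, however long the sequence or wide the embedding. In practice, $\alpha$ alone controls how strong the noise is.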

In code, NEFTune's noise step looks like this.

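```python
import torch

def noised_embed(orig_embed, noise_alpha):
    # Wrap the embedding layer's forward function so noise is added to its output (use during training only)
    def new_func(x):
        embed_init = orig_embed(x)  # shape (B, L, d)
        dims = torch.tensor(embed_init.size(1) * embed_init.size(2))  # L * d
        mag_norm = noise_alpha / torch.sqrt(dims)  # alpha / sqrt(L * d)
        # Uniform(-mag_norm, mag_norm) equals (alpha / sqrt(Ld)) * Uniform(-1, 1)
        return embed_init + torch.zeros_like(embed_init).uniform_(-mag_norm, mag_norm)
    return new_func
```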
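To get a feel for the magnitudes involved, the following sketch uses only the standard library as a stand-in for the tensor code. It computes the per-entry bound `mag_norm` for a hypothetical sequence length and hidden size. It then samples noise to check that the noise norm for one sequence lands near $\alpha/\sqrt{3}$. The helper `neftune_noise` and the chosen values of `L`, `d` and `alpha` are assumptions for illustration only.

```python
import math
import random


def neftune_noise(L, d, alpha, rng):
    """Hypothetical helper: flattened noise for one sequence, L*d entries from Uniform(-mag_norm, mag_norm)."""
    mag_norm = alpha / math.sqrt(L * d)  # same bound as mag_norm in noised_embed
    return [rng.uniform(-mag_norm, mag_norm) for _ in range(L * d)]


L, d, alpha = 128, 1024, 5.0  # hypothetical sequence length, hidden size, and alpha
print(f"per-entry bound mag_norm = {alpha / math.sqrt(L * d):.6f}")

rng = random.Random(0)
noise = neftune_noise(L, d, alpha, rng)
norm = math.sqrt(sum(v * v for v in noise))
print(f"noise norm = {norm:.4f} vs alpha/sqrt(3) = {alpha / math.sqrt(3):.4f}")
```

Each individual entry is perturbed by only about 0.014 here, yet the sequence-level noise norm stays close to $\alpha/\sqrt{3}$. Changing `L` or `d` shrinks or grows the per-entry bound but leaves that norm roughly unchanged.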

As its name suggests, NEFTune is fine-tuning with noisy embeddings. 😄 Does its mechanism remind you of anything? To me, it looks a lot like noise injection, which has long been used when training Computer Vision models. Seen this way, NEFTune is essentially noise injection applied to text embeddings instead of images! In Computer Vision, noise injection not only made models more robust but also improved their performance. So how do noisy embeddings affect model performance in NLP?

Striking performance of NEFTune 🔥

As mentioned earlier, NEFTune is a very simple method. So how large an effect does it have on performance, and what kind of effect? Let's go through the paper's experimental results one by one!

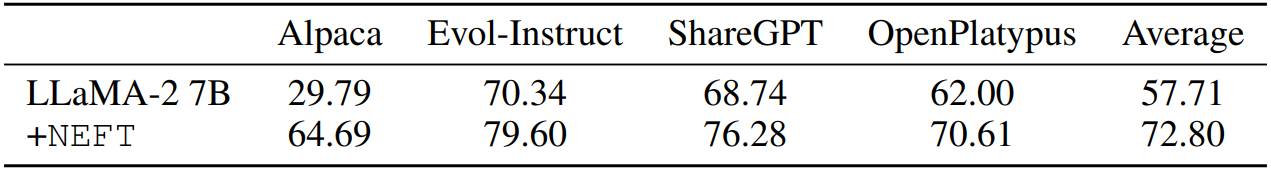

- NEFTune improves text quality ⬆️ The results table shows that fine-tuning with NEFTune substantially improves the model's conversational ability and answer quality.

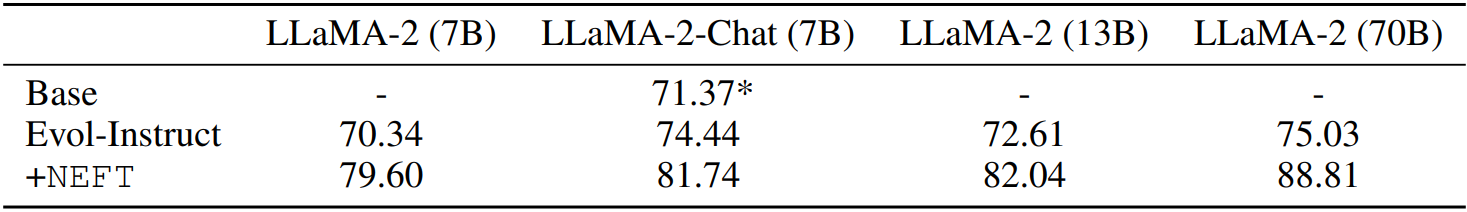

- NEFTune can improve chat models too 🗣️⬆️ Consider a chat model already fine-tuned with RLHF, such as Llama-2-Chat. Further training on WizardLM's Evol-Instruct improved its performance by about 3%, while fine-tuning with NEFTune gave an improvement of as much as about 10%! However, some capabilities of a model trained this way, such as refraining from producing harmful outputs, may be affected.

- Benchmark performance is preserved 🟰 NEFTune clearly improves conversational ability, but an LLM also needs to keep its benchmark performance. The paper therefore also evaluated the models on the tasks of HuggingFace's Open LLM Leaderboard: ARC, HellaSwag, MMLU, and TruthfulQA. As the graph below shows, NEFTune preserves the model's benchmark performance without degrading it!

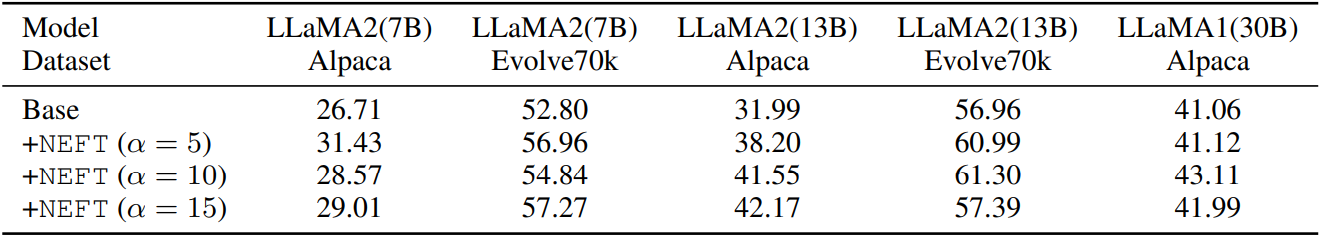

- NEFTune also works with QLoRA 😮 We have seen that NEFTune improves on standard fine-tuning. Is it also effective with parameter-efficient fine-tuning methods such as QLoRA? Yes! The gains are more modest than with full fine-tuning, but it does improve performance.

- NEFTune gives more detailed responses 📚 A side-by-side comparison of responses from NEFTune and from standard fine-tuning shows that NEFTune's are more specific and detailed and include additional information. See the paper's Appendix for details!

So NEFTune clearly improves performance. But one question remains: what makes such a simple method powerful enough to improve performance across so many models? All NEFTune does is add noise to the original embeddings. Is that really what makes it effective?

What makes NEFTune effective? 🤔

This section answers the question posed at the end of the previous one! The paper hypothesizes that NEFTune's gains come from adding noise to the embeddings, just as we suspected. The noise gives the model the following benefits.

- Less overfitting ⬇️: adding noise reduces overfitting to the specifics of the instruction dataset, such as formatting details, exact wording, and text length.

- Better use of the pre-trained model's knowledge 🔥: adding noise keeps the model from collapsing onto one specific data distribution. It can instead draw on a broader range of what it learned during pre-training, producing higher-quality responses.

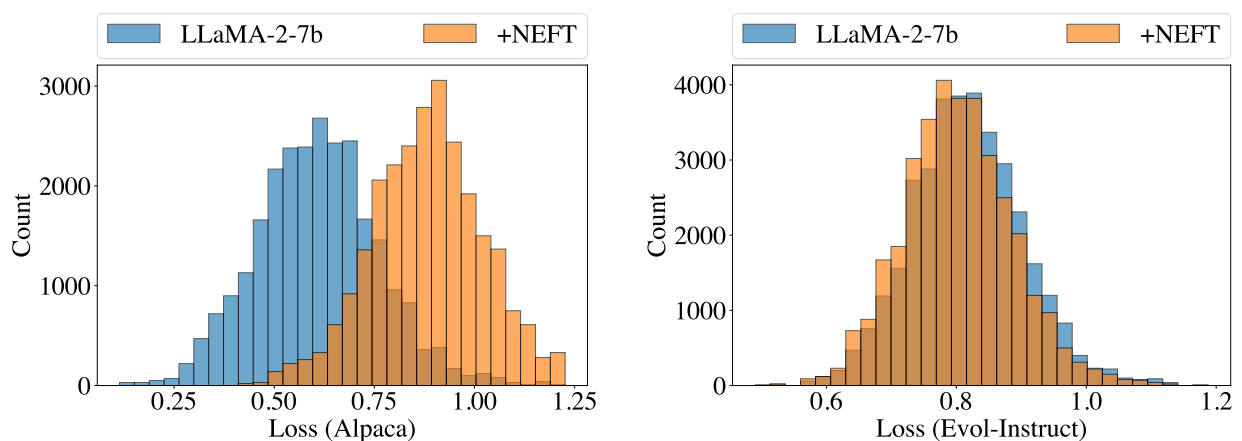

The paper hypothesizes that NEFTune performs better because it overfits less than standard fine-tuning, and sets out to test this experimentally. To do so, it compares the training loss and test loss of the models.

As the graph above shows, NEFTune has a higher training loss than standard fine-tuning but a slightly lower test loss. This indicates that NEFTune overfits less than standard fine-tuning.

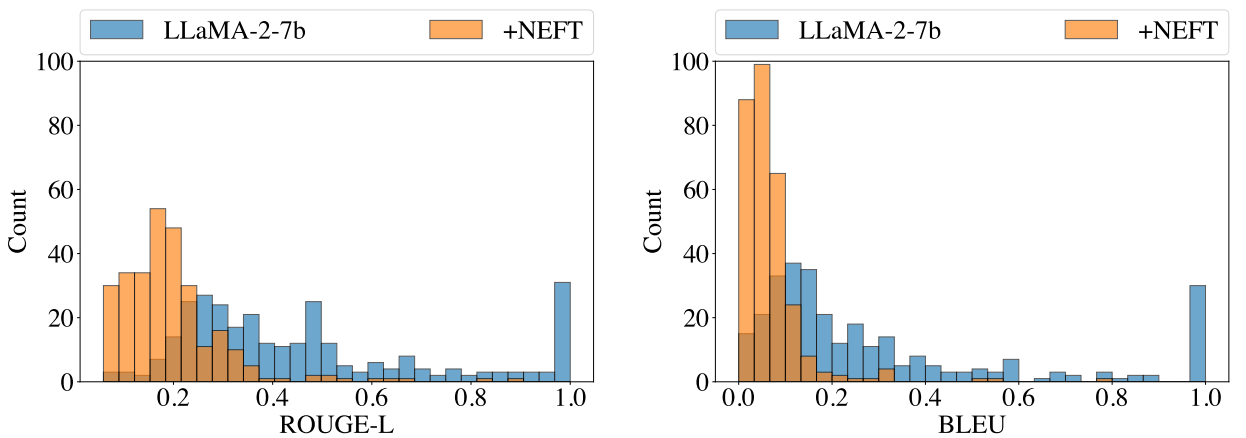

The paper also measured how similar the model's responses are to the ground-truth answers using ROUGE-L and BLEU. NEFTune scores much lower on both metrics than standard fine-tuning. In other words, NEFTune's responses differ more from the ground-truth answers, which is further evidence that it overfits less.
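ROUGE-L measures overlap through the longest common subsequence (LCS) of tokens shared by a response and its reference. The sketch below computes a sentence-level ROUGE-L F1 score. It assumes lowercase whitespace tokenization and the common simplification of taking the harmonic mean of LCS precision and recall. It is an illustration only, not the exact scorer used in the paper; real toolkits add their own tokenization and stemming rules.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of token lists a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            # Extend the diagonal on a match, otherwise keep the best of left/up
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """Sentence-level ROUGE-L F1 with simple lowercase whitespace tokenization."""
    c, r = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
print(rouge_l_f1("the cat sat on the mat", reference))            # identical answer
print(rouge_l_f1("a cat was sitting on a mat today", reference))  # reworded answer
```

A reworded answer can be perfectly good yet score well below an exact copy. This is why a lower ROUGE-L against the ground truth reads as less memorization of the training answers rather than as worse quality.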

NEFTune with HuggingFace TRL

On 10/17, one day before this post went up (10/18), the HuggingFace TRL team updated TRL's SFTTrainer to support NEFTune! (Reference: https://www.linkedin.com/feed/update/urn:li:activity:7120085541861085185/) You no longer need to touch the model's internals. Adding a single argument, `neftune_noise_alpha`, to SFTTrainer is enough to enable NEFTune. If you would like to try fine-tuning with NEFTune, take a look.

What should NLP do in the future? 🧐

That covers NEFTune, a simple method whose improvements are too large to ignore. Normally I would end the post here by thanking the researchers for such remarkable work, but this time I would like to dig a little deeper. Let me start by borrowing a passage from the paper's Conclusion.

"Unlike the computer vision community, which has studied regularization and overfitting for years, the LLM community tends to use standardized training loops that are designed for optimizer stability and not generalization."

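Anyone who studies NLP will probably relate strongly to this passage. Personally, I read it as a pointed critique of current NLP research. In the field today, many studies are being stacked on top of fundamentals that have never been properly investigated. Models are trained without much thought even for overfitting! Current research is certainly producing amazing new findings, but I think basic research deserves just as much attention. In that sense, I believe NEFTune will have a large influence on the direction of NLP research. 😊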

Readers may see things differently, but while application studies are valuable, I believe a single solid piece of basic research matters a great deal. I'll close this post in the hope that NLP research moves in that direction.