The overview of this paper

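BERT and RoBERTa set a new state-of-the-art (SoTA) performance on sentence-pair regression tasks such as semantic textual similarity (STS). However, these tasks require both sentences to be fed into the network, which causes massive computational overhead. Finding the most similar pair in a collection of 10,000 sentences with BERT requires about 50 million inference computations. This makes BERT's construction unsuitable for semantic similarity search as well as for unsupervised tasks such as clustering.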
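The 50 million figure is simply the number of unordered sentence pairs, $n(n-1)/2$ for $n = 10{,}000$. A minimal sketch of that arithmetic, contrasting a cross-encoder that needs one forward pass per pair with a siamese (bi-encoder) setup that needs one pass per sentence; the function names are illustrative only.

```python
from math import comb

def cross_encoder_passes(num_sentences: int) -> int:
    """Forward passes needed when both sentences go through the network together:
    one per unordered pair, i.e. n * (n - 1) / 2."""
    return comb(num_sentences, 2)

def bi_encoder_passes(num_sentences: int) -> int:
    """Forward passes needed when each sentence is embedded once (SBERT-style).
    Comparing the resulting vectors afterwards is cheap arithmetic."""
    return num_sentences

n = 10_000
print(cross_encoder_passes(n))  # 49995000, i.e. roughly 50 million
print(bi_encoder_passes(n))     # 10000
```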

The paper presents Sentence-BERT (SBERT), a modification of the pretrained BERT network that uses siamese and triplet network structures to derive semantically meaningful sentence embeddings that can be compared using cosine similarity. This reduces the time needed to find the most similar pair from 65 hours with BERT/RoBERTa to about 5 seconds with SBERT, while maintaining BERT's accuracy.

The paper evaluates SBERT and SRoBERTa on common STS tasks and on transfer learning tasks, where they outperform other SoTA sentence embedding methods.

Table of Contents

1. Introduction

2. Model

2-1. Training Details

3. Evaluation - Semantic Textual Similarity

4. Evaluation - SentEval

5. Computational Efficiency

1. Introduction

The paper introduces Sentence-BERT (SBERT), which applies siamese and triplet networks to BERT to obtain semantically meaningful sentence embeddings. SBERT makes BERT usable for certain new tasks to which it could not be applied until now. These tasks include large-scale semantic similarity comparison, clustering, and information retrieval via semantic search.

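BERT set new SoTA performance on various sentence classification and sentence-pair regression tasks. BERT uses a cross-encoder: two sentences are passed to the transformer network together and the target value is predicted. However, this setup is unsuitable for various pair regression tasks because there are too many possible combinations.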

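A common way to handle clustering and semantic search is to map each sentence into a vector space in which semantically similar sentences are close to each other. Researchers therefore started feeding individual sentences into BERT to derive fixed-size sentence embeddings. The most commonly used approaches are to average the BERT output layer or to use the output of the first token (the CLS token). As the experiments show, these common practices yield rather bad sentence embeddings, often worse than averaged GloVe embeddings.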

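SBERT was developed to alleviate this issue. The siamese network architecture makes it possible to derive a fixed-size vector for each input sentence. Semantically similar sentences can then be found with a similarity measure such as cosine similarity, Manhattan distance, or Euclidean distance. These measures can be computed extremely efficiently on modern hardware, which allows SBERT to be used for semantic similarity search as well as for clustering. Finding the most similar sentence pair in a collection of 10,000 sentences takes 65 hours with BERT; with SBERT, computing the embeddings takes about 5 seconds and computing the cosine similarities takes about 0.01 seconds. Using optimized index structures, finding the most similar Quora question can be reduced from 50 hours to a few milliseconds.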
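Once every sentence has been embedded (the expensive step, done once per sentence), finding the most similar pair reduces to dot products of unit-length vectors. A minimal sketch, assuming the embeddings are already computed; the toy 3-dimensional vectors below stand in for real SBERT outputs, and `most_similar_pair` is a hypothetical helper, not part of any library.

```python
import math
from itertools import combinations

def normalize(vec):
    """Scale a (non-zero) vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def most_similar_pair(embeddings):
    """Return (i, j, score) for the pair of embeddings with the highest cosine similarity."""
    unit = [normalize(e) for e in embeddings]
    best = (-1, -1, -math.inf)
    for i, j in combinations(range(len(unit)), 2):
        score = sum(a * b for a, b in zip(unit[i], unit[j]))
        if score > best[2]:
            best = (i, j, score)
    return best

toy = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(most_similar_pair(toy)[:2])  # (0, 1)
```

The pairwise loop is still quadratic, but each step is a cheap vector operation rather than a transformer forward pass; at scale one would use a single matrix multiplication or an index structure instead of a Python loop.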

The paper fine-tunes SBERT on NLI data, which produces sentence embeddings that significantly outperform the previous SoTA sentence embedding methods InferSent and Universal Sentence Encoder. SBERT can also be adapted to a specific task: it achieves new SoTA performance on a challenging argument similarity dataset and on a triplet dataset for distinguishing sentences from different sections of a Wikipedia article.

2. Model

SBERT adds a pooling operation to the output of BERT/RoBERTa to derive a fixed-size sentence embedding. The experiments compare three pooling strategies:

- The output of the CLS token

- The mean of all output vectors (MEAN strategy)

- The max-over-time of the output vectors (MAX strategy)
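The three strategies can be sketched on a toy sequence of token output vectors. This is an illustration under assumptions: vectors are plain Python lists, and padding tokens are excluded with a 0/1 attention mask, a detail not described above.

```python
def cls_pooling(token_embeddings, mask):
    """CLS strategy: use the output vector of the first token ([CLS]); mask is unused."""
    return list(token_embeddings[0])

def mean_pooling(token_embeddings, mask):
    """MEAN strategy: average the output vectors of the non-padding tokens."""
    kept = [vec for vec, m in zip(token_embeddings, mask) if m]
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(len(kept[0]))]

def max_pooling(token_embeddings, mask):
    """MAX strategy: max-over-time, i.e. the per-dimension maximum over non-padding tokens."""
    kept = [vec for vec, m in zip(token_embeddings, mask) if m]
    return [max(vec[d] for vec in kept) for d in range(len(kept[0]))]

# Three 2-d token vectors; the last one is padding (mask 0) and must not leak in.
tokens = [[1.0, 4.0], [3.0, 0.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(cls_pooling(tokens, mask))   # [1.0, 4.0]
print(mean_pooling(tokens, mask))  # [2.0, 2.0]
print(max_pooling(tokens, mask))   # [3.0, 4.0]
```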

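To fine-tune BERT and RoBERTa, the paper builds siamese and triplet networks that update the weights so that the produced sentence embeddings are semantically meaningful and can be compared with cosine similarity. The network structure depends on the available training data. The experiments use the following structures and objective functions.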

Classification Objective Function. The sentence embeddings $u$ and $v$ are concatenated with their element-wise difference $|u-v|$, and the result is multiplied by the trainable weight $W_{t} \in \mathbb{R}^{3n \times k}$:

$o = \mathrm{softmax}(W_{t}(u, v, |u-v|))$

Here $n$ is the dimension of the sentence embeddings and $k$ is the number of labels. Cross-entropy loss is optimized. This structure is depicted in Figure 1.

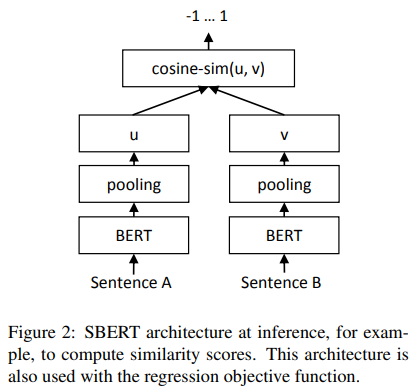
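A minimal sketch of the classification objective for a single pair. Assumptions: $W_t$ is stored as $3n$ rows of $k$ floats, so the product is computed as the feature vector times $W_t$ to make the $3n \times k$ shape line up; the toy sizes ($n = 2$, $k = 3$) and all-zero weights are illustrative.

```python
import math

def classification_logits(u, v, W):
    """Concatenate (u, v, |u - v|) into a 3n feature vector and project it with W (3n x k)."""
    features = list(u) + list(v) + [abs(a - b) for a, b in zip(u, v)]
    assert len(features) == len(W), "W must have 3n rows"
    k = len(W[0])
    return [sum(f * W[r][c] for r, f in enumerate(features)) for c in range(k)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    """Negative log-likelihood of the gold label index."""
    return -math.log(probs[label])

# Toy example: n = 2, k = 3 labels, untrained (all-zero) weights.
u, v = [1.0, 0.0], [0.0, 1.0]
W = [[0.0] * 3 for _ in range(3 * len(u))]
probs = softmax(classification_logits(u, v, W))
print(probs)                    # uniform: each label gets 1/3
print(cross_entropy(probs, 0))  # log(3), about 1.0986
```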

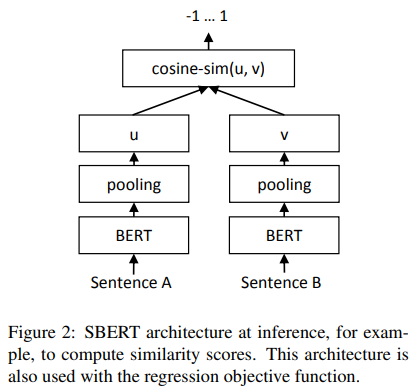

Regression Objective Function. The cosine similarity between the two sentence embeddings $u$ and $v$ is computed (Figure 2), and mean squared error is used as the objective function.
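The regression objective can be sketched as the mean squared error between $\cos(u, v)$ and the gold score of each pair. This assumes the gold scores are already on the same scale as cosine similarity; how labels are rescaled is not described above.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def regression_loss(pairs, gold_scores):
    """Mean squared error between cos(u, v) and the gold similarity of each pair."""
    errors = [(cosine_similarity(u, v) - g) ** 2 for (u, v), g in zip(pairs, gold_scores)]
    return sum(errors) / len(errors)

pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
print(regression_loss(pairs, [1.0, 0.0]))  # 0.0: predictions match the gold scores
print(regression_loss(pairs, [0.0, 0.0]))  # 0.5: first pair is off by 1
```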

Triplet Objective Function. Given an anchor sentence $a$, a positive sentence $p$, and a negative sentence $n$, the triplet loss tunes the network so that the distance between $a$ and $p$ is smaller than the distance between $a$ and $n$. Mathematically, the following loss function is minimized:

$\max(||s_{a} - s_{p}|| - ||s_{a} - s_{n}|| + \epsilon, 0)$

Here $s_{x}$ is the sentence embedding of $a$/$n$/$p$, $||\cdot||$ is a distance metric, and $\epsilon$ is a margin. The margin $\epsilon$ ensures that $s_{p}$ is at least $\epsilon$ closer to $s_{a}$ than $s_{n}$ is. Euclidean distance is used as the metric, and $\epsilon = 1$.
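A direct transcription of the triplet loss above, using Euclidean distance and $\epsilon = 1$; the 2-d vectors are toy stand-ins for sentence embeddings.

```python
import math

def euclidean(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def triplet_loss(s_a, s_p, s_n, epsilon=1.0):
    """max(||s_a - s_p|| - ||s_a - s_n|| + epsilon, 0) with Euclidean distance."""
    return max(euclidean(s_a, s_p) - euclidean(s_a, s_n) + epsilon, 0.0)

anchor = [0.0, 0.0]
print(triplet_loss(anchor, [1.0, 0.0], [3.0, 0.0]))  # 0.0: negative is at least epsilon farther
print(triplet_loss(anchor, [1.0, 0.0], [1.5, 0.0]))  # 0.5: margin violated by 0.5
```

The loss is zero as soon as the negative is at least $\epsilon$ farther from the anchor than the positive, so well-separated triplets stop contributing gradients.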

2-1. Training Details

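The paper trains SBERT on the combination of the SNLI and Multi-Genre NLI datasets, fine-tuning it for one epoch with a 3-way softmax-classifier objective function (one class per NLI label: entailment, neutral, contradiction). Training uses a batch size of 16, the Adam optimizer with a learning rate of 2e-5, and a linear learning-rate warm-up over 10% of the training data. The default pooling strategy is MEAN.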
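The warm-up setting can be made concrete as a learning-rate schedule over optimizer steps. A sketch under stated assumptions: "10% of the training data" is read as 10% of the steps of the single epoch, the linear decay to 0 after warm-up is an assumption, and the 1,000-pair dataset is hypothetical.

```python
def warmup_linear_lr(step, total_steps, peak_lr=2e-5, warmup_fraction=0.1):
    """Learning rate at a given optimizer step.

    Rises linearly from 0 to peak_lr over the first warmup_fraction of the steps.
    ASSUMPTION: afterwards it decays linearly to 0 (not specified above)."""
    warmup_steps = max(1, int(total_steps * warmup_fraction))
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, (total_steps - step) / remaining)

# Hypothetical run: 1,000 training pairs, batch size 16, a single epoch.
batch_size = 16
total_steps = -(-1_000 // batch_size)  # ceiling division: 63 steps
for step in (0, 3, 6, 63):
    print(step, warmup_linear_lr(step, total_steps))
```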

3. Evaluation - Semantic Textual Similarity

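The paper evaluates SBERT on common STS tasks. SoTA methods often learn a regression function that maps sentence embeddings to a similarity score. However, such regression functions work pair-wise, and because of the combinatorial explosion they often cannot scale once the sentence collection reaches a certain size. Instead, the paper always uses cosine similarity to compare two sentence embeddings. Experiments with negative Manhattan distance and negative Euclidean distance as similarity measures gave roughly the same results for all methods.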
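The three similarity measures can be written side by side. Distances are negated so that, like cosine similarity, a larger value means "more similar", which keeps the ordering compatible with the gold scores. Toy vectors only.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def neg_manhattan(u, v):
    """Negated L1 distance."""
    return -sum(abs(a - b) for a, b in zip(u, v))

def neg_euclidean(u, v):
    """Negated L2 distance."""
    return -math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

u, v = [3.0, 0.0], [0.0, 4.0]
print(cosine(u, v), neg_manhattan(u, v), neg_euclidean(u, v))  # 0.0 -7.0 -5.0
```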

3-1. Unsupervised STS

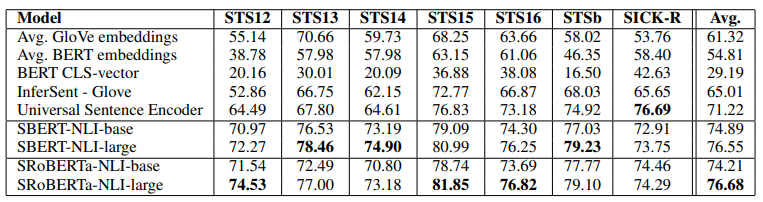

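The paper evaluates SBERT on STS without using any STS-specific training data. Prior work has shown that Pearson correlation is badly suited for STS, so the paper instead computes Spearman's rank correlation between the cosine similarity of the sentence embeddings and the gold labels. The results are shown in Table 1.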
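Spearman's rank correlation is the Pearson correlation of the rank vectors, so it only compares orderings: cosine similarities do not need to share the scale of the gold labels. A self-contained sketch with average ranks for ties; the predicted and gold values are made up for illustration.

```python
def average_ranks(values):
    """1-based ranks; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 0-based positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman's rho: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))

predicted = [0.91, 0.35, 0.60, 0.10]  # cosine similarities of four sentence pairs
gold = [4.8, 1.0, 3.2, 0.4]           # hypothetical gold scores on a 0-5 scale
print(spearman(predicted, gold))      # ~1.0: same ordering, different scale
```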

Using the described siamese network structure and fine-tuning mechanism substantially improves the correlation and clearly outperforms InferSent and Universal Sentence Encoder. Although RoBERTa improves performance on several supervised tasks, SBERT and SRoBERTa show only minor differences when generating sentence embeddings.

3-2. Supervised STS

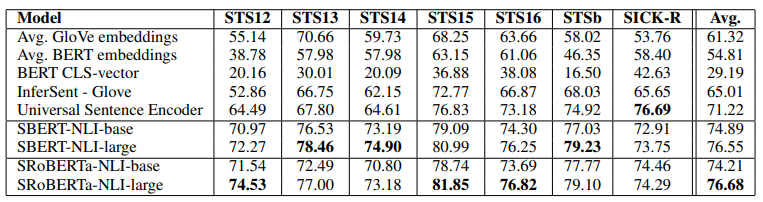

The STS benchmark (STSb) is a popular dataset for evaluating supervised STS systems. BERT set a new SoTA by passing both sentences through the network and applying a simple regression method to the output. The paper fine-tunes SBERT on the training set with the regression objective function. At prediction time, the cosine similarity between the sentence embeddings is computed. All systems are trained with 10 random seeds to counter variance. The results are shown in Table 2.

4. Evaluation - SentEval

SentEval is a toolkit for evaluating the quality of sentence embeddings. The sentence embeddings are used as features for a logistic regression classifier. The classifier is trained on various tasks in a 10-fold cross-validation setup, and the prediction accuracy is computed on the test fold.
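The evaluation protocol can be sketched as "fit a logistic regression on frozen embeddings, report mean accuracy over 10 test folds". This is a simplified stand-in, not SentEval's own implementation: a binary classifier trained with plain gradient descent on synthetic 2-d "embeddings", with a hypothetical learning rate and epoch count.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logreg(X, y, lr=0.5, epochs=200):
    """Binary logistic regression fitted with full-batch gradient descent."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            gw = [g + err * xj for g, xj in zip(gw, xi)]
            gb += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, gw)]
        b -= lr * gb / len(X)
    return w, b

def predict(model, x):
    w, b = model
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else 0

def cross_val_accuracy(X, y, folds=10, seed=0):
    """Mean test-fold accuracy of k-fold cross-validation (k = 10 as in SentEval)."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    accuracies = []
    for f in range(folds):
        test = set(idx[f::folds])  # every folds-th shuffled index forms one test fold
        train = [i for i in idx if i not in test]
        model = train_logreg([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in test)
        accuracies.append(correct / len(test))
    return sum(accuracies) / folds

# Synthetic 2-d "sentence embeddings": dimension 0 carries the label, dimension 1 is noise.
rng = random.Random(1)
X, y = [], []
for _ in range(100):
    label = rng.randint(0, 1)
    X.append([(1.0 if label else -1.0) * rng.uniform(0.2, 1.0), rng.uniform(-1.0, 1.0)])
    y.append(label)
print(cross_val_accuracy(X, y))
```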

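SBERT sentence embeddings are not intended to be used for transfer learning to other tasks. The paper considers fine-tuning BERT on the new task, as described in the original BERT paper, to be the more suitable method, because it updates all layers of the BERT network. Still, SentEval gives an impression of the quality of the sentence embeddings across various tasks.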

The paper compares SBERT sentence embeddings on seven SentEval transfer tasks. The results are shown in Table 3.

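Using averaged BERT embeddings or the CLS-token output of a BERT network gives bad results on various STS tasks, worse than averaged GloVe embeddings. On SentEval, however, averaged BERT embeddings and the BERT CLS-token output achieve decent results and outperform averaged GloVe embeddings. The reason is the different setup. For the STS tasks, cosine similarity is used to measure the similarity between sentence embeddings, and cosine similarity treats all dimensions equally. SentEval, in contrast, fits a logistic regression classifier to the sentence embeddings, which allows certain dimensions to have a larger or smaller impact on the classification result.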
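A toy illustration of this argument, with made-up 2-d vectors: a large dimension shared by every sentence dominates cosine similarity, while a linear classifier can put its weight on the small dimension that actually carries the distinction. The weights are hypothetical, not fitted.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Dimension 0 is large but identical for both sentences; dimension 1 is small but informative.
u = [10.0, 1.0]
v = [10.0, -1.0]
print(round(cosine(u, v), 3))  # 0.98: cosine treats the pair as nearly identical

# A linear classifier could learn to ignore dimension 0 and rely on dimension 1.
weights = [0.0, 1.0]  # hypothetical learned weights
print(sum(w * x for w, x in zip(weights, u)), sum(w * x for w, x in zip(weights, v)))  # 1.0 -1.0
```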

5. Computational Efficiency

Sentence embeddings may need to be computed for millions of sentences, so a high computation speed is desired. This section compares SBERT with averaged GloVe embeddings, InferSent, and Universal Sentence Encoder. The results are shown in Table 4.

On CPU, InferSent is faster than SBERT because of its much simpler network architecture: InferSent uses a single Bi-LSTM layer, whereas BERT uses 12 stacked transformer layers. The computational advantage of transformer networks shows up on GPUs, however, where SBERT with smart batching is the fastest method.
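Smart batching, as described in the paper, groups sentences of similar length and pads each mini-batch only to its longest element. The sketch below counts the tokens processed with and without that grouping; the sentence lengths are hypothetical, and the helper names are illustrative.

```python
def padded_tokens(lengths, batch_size):
    """Total tokens processed when each mini-batch is padded to its longest sentence."""
    total = 0
    for start in range(0, len(lengths), batch_size):
        batch = lengths[start:start + batch_size]
        total += max(batch) * len(batch)
    return total

def smart_batches(lengths, batch_size):
    """Sort sentence indices by length, then chunk them into mini-batches."""
    order = sorted(range(len(lengths)), key=lambda i: lengths[i])
    return [order[s:s + batch_size] for s in range(0, len(order), batch_size)]

lengths = [5, 40, 6, 38, 7, 42, 5, 39]  # token counts of 8 sentences (182 real tokens)
naive = padded_tokens(lengths, batch_size=4)
sorted_lengths = [lengths[i] for batch in smart_batches(lengths, 4) for i in batch]
smart = padded_tokens(sorted_lengths, batch_size=4)
print(naive, smart)  # 328 196
```

Because `smart_batches` returns indices rather than sentences, the resulting embeddings can be written back to their original positions after encoding.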

Source

https://arxiv.org/abs/1908.10084

Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks

